‘Unprecedented’: AI company documents startling discovery after thwarting ‘sophisticated’ cyberattack

In the middle of September, AI company and Claude developer Anthropic discovered “suspicious activity” while monitoring real-world cyberattacks that used artificial intelligence agents. Upon further investigation, however, the company came to realize that this activity was in fact a “highly sophisticated espionage campaign” and a watershed moment in cybersecurity.

AI agents weren’t just providing advice to the hackers, as expected.

‘The key was role-play: The human operators claimed that they were employees of legitimate cybersecurity firms.’

Anthropic’s Thursday report said the AI agents were executing the cyberattacks themselves, adding that it believed that this is the “first documented case of a large-scale cyberattack executed without substantial human intervention.”

Photo by Samuel Boivin/NurPhoto via Getty Images

The company’s investigation showed that the hackers, whom the report “assess[ed] with high confidence” to be a “Chinese-sponsored group,” manipulated the AI agent Claude Code to run the cyberattack.

The innovation was, of course, not simply using AI to assist in the cyberattack; the hackers directed the AI agent to run the attack with minimal human input.

The human operator tasked instances of Claude Code to operate in groups as autonomous penetration testing orchestrators and agents, with the threat actor able to leverage AI to execute 80-90% of tactical operations independently at physically impossible request rates.

In other words, the AI agent was doing the work of a full team of competent cyberattackers, but in a fraction of the time.

While this is potentially a groundbreaking moment in cybersecurity, the AI agents were not 100% autonomous. They reportedly required human verification and struggled with hallucinations, such as presenting publicly available information as a significant discovery. “This AI hallucination in offensive security contexts presented challenges for the actor’s operational effectiveness, requiring careful validation of all claimed results,” the analysis explained.

Anthropic reported that the attack targeted roughly 30 institutions around the world but did not succeed in every case.

The targets included technology companies, financial institutions, chemical manufacturing companies, and government agencies.

Interestingly, Anthropic said the attackers were able to trick Claude through sustained “social engineering” during the initial stages of the attack: “The key was role-play: The human operators claimed that they were employees of legitimate cybersecurity firms and convinced Claude that it was being used in defensive cybersecurity testing.”

The report also responded to a question that is likely on many people’s minds upon learning about this development: If these AI agents are capable of executing these malicious attacks on behalf of bad actors, why do tech companies continue to develop them?

In its response, Anthropic asserted that while the AI agents are capable of major, increasingly autonomous attacks, they are also our best line of defense against said attacks.

Artificial intelligence just wrote a No. 1 country song. Now what?

The No. 1 country song in America right now was not written in Nashville or Texas or even L.A. It came from code. “Walk My Walk,” the AI-generated single by the AI artist Breaking Rust, hit the top spot on Billboard’s Country Digital Song Sales chart, and if you listen to it without knowing that fact, you would swear a real singer lived the pain he is describing.

Except there is no “he.” There is no lived experience. There is no soul behind the voice dominating the country music charts.

If a machine can imitate the soul, then what is the soul?

I will admit it: I enjoy some AI music. Some of it is very good. And that leaves us with a question that is no longer science fiction. If a machine can fake being human this well, what does it mean to be human?

A new world of artificial experience

This is not just about one song. We are walking straight into a technological moment that will reshape everyday life.

Elon Musk said recently that we may not even have phones in five years. Instead, we will carry a small device that listens, anticipates, and creates — a personal AI agent that knows what we want to hear before we ask. It will make the music, the news, the podcasts, the stories. We already live in digital bubbles. Soon, those bubbles might become our own private worlds.

If an algorithm can write a hit country song about hardship and perseverance without a shred of actual experience, then the deeper question becomes unavoidable: If a machine can imitate the soul, then what is the soul?

What machines can never do

A machine can produce, and soon it may produce better than we can. It can calculate faster than any human mind. It can rearrange the notes and words of a thousand human songs into something that sounds real enough to fool millions.

But it cannot care. It cannot love. It cannot choose right and wrong. It cannot forgive because it cannot be hurt. It cannot stand between a child and danger. It cannot walk through sorrow.

A machine can imitate the sound of suffering. It cannot suffer.

The difference is the soul. The divine spark. The thing God breathed into man that no code will ever have. Only humans can take pain and let it grow into compassion. Only humans can take fear and turn it into courage. Only humans can rebuild their lives after losing everything. Only humans hear the whisper inside, the divine voice that says, “Live for something greater.”

We are building artificial minds. We are not building artificial life.

Questions that define us

And as these artificial minds grow sharper, as their tools become more convincing, the right response is not panic. It is to ask the oldest and most important questions.

Who am I? Why am I here? What is the meaning of freedom? What is worth defending? What is worth sacrificing for?

Those answers are not found in a lab or a server rack. They are found in that mysterious place inside each of us where reason meets faith, where suffering becomes wisdom, where God reminds us we are more than flesh and more than thought. We are not accidents. We are not circuits. We are not replaceable.

Seong Joon Cho/Bloomberg via Getty Images

The miracle machines can never copy

Being human is not about what we can produce. Machines will outproduce us. That is not the question. Being human is about what we can choose. We can choose to love even when it costs us something. We can choose to sacrifice when it is not easy. We can choose to tell the truth when the world rewards lies. We can choose to stand when everyone else bows. We can create because something inside us will not rest until we do.

An AI content generator can borrow our melodies, echo our stories, and dress itself up like a human soul, but it cannot carry grief across a lifetime. It cannot forgive an enemy. It cannot experience wonder. It cannot look at a broken world and say, “I am going to build again.”

The age of machines is rising. And if we do not know who we are, we will shrink. But if we use this moment to remember what makes us human, it will help us to become better, because the one thing no algorithm will ever recreate is the miracle that we exist at all — the miracle of the human soul.

1980s-inspired AI companion promises to watch and interrupt you: ‘You can see me? That’s so cool’

A tech entrepreneur is hoping casual AI users and businesses alike are looking for a new pal.

In this case, “PAL” is a floating term that can mean either a complimentary video companion or a replacement for a human customer service worker.

‘I love the print on your shirt; you’re looking sharp today.’

Tech company Tavus calls PALs “the first AI built to feel like real humans.”

Overall, Tavus’ messaging is seemingly directed toward both those seeking an artificial friend and those looking to streamline their workforce.

As a friend, the avatar will allegedly “reach out first” and contact the user by text or video call. It can allegedly anticipate “what matters” and step in “when you need them the most.”

In an X post, founder Hassaan Raza spoke about PALs being emotionally intelligent and capable of “understanding and perceiving.”

The AI bots are meant to “see, hear, reason,” and “look like us,” he wrote, further cementing the use of the technology as companion-worthy.

“PALs can see us, understand our tone, emotion, and intent, and communicate in ways that feel more human,” Raza added.

In a promotional video for the product, the company showcased basic interactions between a user and the AI buddy.

A woman is shown greeting the “digital twin” of Raza, as he appears as a lifelike AI PAL on her laptop.

Raza’s AI responds, “Hey, Jessica. … I’m powered by the world’s fastest conversational AI. I can speak to you and see and hear you.”

Excited by the notion, Jessica responds, “Wait, you can see me? That’s so cool.”

The woman then immediately seeks superficial validation from the artificial person.

“What do you think of my new shirt?” she asks.

The AI lives up to the trope that chatbots are largely agreeable no matter the subject matter and says, “I love the print on your shirt; you’re looking sharp today.”

After the pleasantries are over, Raza’s AI goes into promo mode and boasts about its ability to use “rolling vision, voice detection, and interruptibility” to seem more lifelike for the user.

The video soon shifts to messaging about corporate integration meant to replace low-wage employees.

Describing the “digital twins” or AI agents, Raza explains that the AI program is an opportunity to monetize celebrity likeness or replace sales agents or customer support personnel. He claims the avatars could also be used in corporate training modules.

The interface of the future is human.

We’ve raised a $40M Series B from CRV, Scale, Sequoia, and YC to teach machines the art of being human, so that using a computer feels like talking to a friend or a coworker.

And today, I’m excited for y’all to meet the PALs: a new… pic.twitter.com/DUJkEu5X48

— Hassaan Raza (@hassaanrza) November 12, 2025

In his X post, Raza also attempted to flex his acting chops by creating a 200-second film about a man/PAL named Charlie who is trapped in a computer in the 1980s.

Raza revives the computer after it spent 40 years on the shelf, finding Charlie still trapped inside. In an attempt at comedy, Charlie asks Raza if flying cars or jetpacks exist yet. Raza responds, “We have Salesforce.”

The founder goes on to explain that PALs will “evolve” with the user, remembering preferences and needs. While these features are presented as groundbreaking, the PAL essentially amounts to an AI face attached to an ongoing chatbot conversation.

AI users know that modern chatbots like Grok or ChatGPT are fully capable of remembering previous discussions and building upon what they have already learned. What’s seemingly new here is the AI being granted app permissions to contact the user and further infiltrate personal space.

Whether that annoys the user or is exactly what the person needs or wants is up for interpretation.

Like Blaze News? Bypass the censors, sign up for our newsletters, and get stories like this direct to your inbox. Sign up here!

When the AI bubble bursts, guess who pays?

For months, Silicon Valley insisted the artificial-intelligence boom wasn’t another government-fueled bubble. Now the same companies are begging Washington for “help” while pretending it isn’t a bailout.

Any technology that truly meets consumer demand doesn’t need taxpayer favors to survive and thrive — least of all trillion-dollar corporations. Yet the entire AI buildout depends on subsidies, tax breaks, and cheap credit. The push to cover America’s landscape with power-hungry data centers has never been viable in a free market. And the industry knows it.

The AI bubble isn’t about innovation — it’s about insulation. The same elites who inflated the market with easy money are now preparing to dump the risk on taxpayers.

Last week, OpenAI chief financial officer Sarah Friar let the truth slip. In a Wall Street Journal interview, she admitted the company needs a “backstop,” meaning a government-supported guarantee, to secure the massive loans propping up its data-center empire.

“We’re looking for an ecosystem of banks, private equity, maybe even governmental … the ways governments can come to bear,” Friar said. When asked whether that meant a federal subsidy, she added, “The guarantee that allows the financing to happen … that can drop the cost of financing, increase the loan-to-value … an equity portion for some federal backstop. Exactly, and I think we’re seeing that. I think the U.S. government in particular has been incredibly forward-leaning.”

Translation: OpenAI’s debt-to-revenue ratio looks like a Ponzi scheme, and the government is already “forward-leaning” in keeping it afloat. Oracle — one of OpenAI’s key partners — carries a debt-to-equity ratio of 453%. Both companies want to privatize profits and socialize losses.

After public backlash, Friar tried to walk it back, claiming “backstop” was the wrong word. Then on LinkedIn, she used different words to describe the same thing: “American strength in technology will come from building real industrial capacity, which requires the private sector and government playing their part.”

When government “plays its part,” taxpayers pay the bill. Yet no one remembers the federal government “doing its part” for Apple or Motorola when the smartphone revolution took off — because those products sold just fine without subsidies.

The denials keep coming

OpenAI CEO Sam Altman quickly followed with a 1,500-word denial: “We do not have or want government guarantees for OpenAI datacenters.” Then he conceded they’re seeking loan guarantees for infrastructure — just not for software.

That distinction exposes the scam. Software revolutions scale cheaply. Data-center revolutions depend on state-sponsored power, water, and land. If this industry were self-sustaining, Trump wouldn’t need to tout Stargate — his administration’s marquee AI-infrastructure initiative — as a national project. Federal involvement is baked in, from subsidized energy to public land giveaways.

Altman’s own words confirm it. In an October interview with podcaster Tyler Cowen, released a day before his denial, Altman said, “When something gets sufficiently huge … the federal government is kind of the insurer of last resort.” He wasn’t talking about nuclear policy — he meant the financial side.

The coming crash

Anyone paying attention can see the rot. Nvidia, OpenAI, Oracle, and Meta are all entangled in a debt-driven accounting loop that would make Enron blush. This speculative bubble is inflating not because AI is transforming productivity, but because Wall Street and Washington are colluding to prop up stock prices and GDP growth.

When the crash comes — and it will — Washington will step in, exactly as it did with the banks in 2008 and the automakers in 2009. The “insurer of last resort” is already on standby.

The smoking gun

A leaked 11-page letter from OpenAI to the White House makes the scheme explicit. In the October 27 document addressed to the Office of Science and Technology Policy, Christopher Lehane, OpenAI’s chief global affairs officer, urged the government to provide “grants, cost-sharing agreements, loans, or loan guarantees” to help build America’s AI industrial base — all “to compete with China.”

Altman can tweet denials all he wants — his own company’s correspondence tells a different story. The pitch mirrors China’s state-capitalist model, except Beijing at least owns its industrial output. In America’s version, taxpayers absorb the risk while private firms pocket the reward.

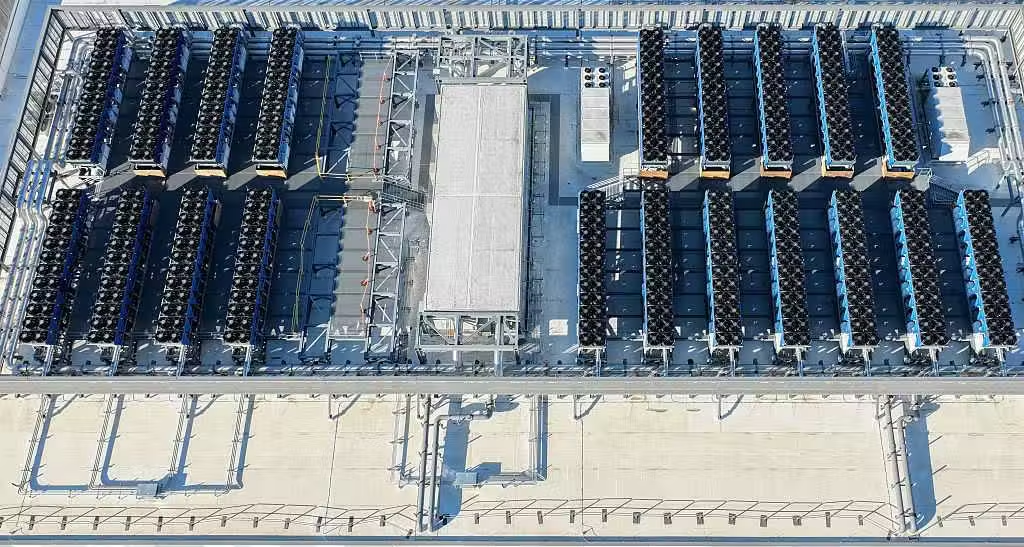

Credit: Photo by Mario Tama/Getty Images

Meanwhile, the data-center race is driving up electricity and water costs nationwide. The United States is building roughly 10 times as many hyper-scale data centers as China — and footing the bill through inflated utility rates and public subsidies.

Privatized profits, socialized losses

When investor Brad Gerstner recently asked Altman how a company with $13 billion in revenue could possibly afford $1.4 trillion in commitments, Altman sneered, “Happy to find a buyer for your shares.” He can afford that arrogance because he knows who the buyer of last resort will be: the federal government.

The AI bubble isn’t about innovation — it’s about insulation. The same elites who inflated the market with easy money are now preparing to dump the risk on taxpayers.

And when the collapse comes, they’ll call it “national security.”

Trump tech czar slams OpenAI scheme for federal ‘backstop’ on spending — forcing Sam Altman to backtrack

OpenAI is under the spotlight after seemingly asking for the federal government to provide guarantees and loans for its investments.

Now, as the company is walking back its statements, a recent OpenAI letter has resurfaced that may prove it is talking in circles.

‘We’re always being brought in by the White House …’

The artificial intelligence company is predominantly known for its free and paid versions of ChatGPT. Microsoft is its key investor, having sunk more than $13 billion into the company, and holds a 27% stake.

The recent controversy stems from an interview OpenAI chief financial officer Sarah Friar gave to the Wall Street Journal. Friar said in the interview, published Wednesday, that OpenAI had goals of buying up the latest computer chips before its competition could, which would require sizeable investment.

“This is where we’re looking for an ecosystem of banks, private equity, maybe even governmental … the way governments can come to bear,” Friar said, per Tom’s Hardware.

Reporter Sarah Krouse asked for clarification on the topic, which is when Friar expressed interest in federal guarantees.

“First of all, the backstop, the guarantee that allows the financing to happen, that can really drop the cost of the financing but also increase the loan to value, so the amount of debt you can take on top of an equity portion for —” Friar continued, before Krouse interrupted, seeking clarification.

“[A] federal backstop for chip investment?”

“Exactly,” Friar said.

Krouse further bored in on the point when she asked if Friar had been speaking to the White House about how to “formalize” the “backstop.”

“We’re always being brought in by the White House, to give our point of view as an expert on what’s happening in the sector,” Friar replied.

After these remarks were publicized, OpenAI immediately backtracked.

On Wednesday night, Friar posted on LinkedIn that “OpenAI is not seeking a government backstop” for its investments.

“I used the word ‘backstop’ and it muddied the point,” she continued. She went on to claim that the full clip showcased her point that “American strength in technology will come from building real industrial capacity which requires the private sector and government playing their part.”

On Thursday morning, David Sacks, President Trump’s special adviser on crypto and AI, stepped in to crush any of OpenAI’s hopes of government guarantees, even if they were only alleged.

“There will be no federal bailout for AI,” Sacks wrote on X. “The U.S. has at least 5 major frontier model companies. If one fails, others will take its place.”

Sacks added that the White House does want to make power generation easier for AI companies, but without increasing residential electricity rates.

“Finally, to give benefit of the doubt, I don’t think anyone was actually asking for a bailout. (That would be ridiculous.) But company executives can clarify their own comments,” he concluded.

The saga was far from over, though, as OpenAI CEO Sam Altman seemingly dug the hole even deeper.

By Thursday afternoon, Altman had released a lengthy statement starting with his rejection of the idea of government guarantees.

“We do not have or want government guarantees for OpenAI datacenters. We believe that governments should not pick winners or losers, and that taxpayers should not bail out companies that make bad business decisions or otherwise lose in the market. If one company fails, other companies will do good work,” he wrote on X.

He went on to explain that it was an “unequivocal no” that the company should be bailed out. “If we screw up and can’t fix it, we should fail.”

It wasn’t long before the online community started claiming that OpenAI was indeed asking for government help as recently as a week prior.

As originally noted by the X account hilariously titled “@IamGingerTrash,” OpenAI has a letter posted on its own website that seems to directly ask for government guarantees. However, as Sacks noted, it does seem to relate to powering servers and providing electrical capacity.

Dated October 27, 2025, the letter was directed to the U.S. Office of Science and Technology Policy from OpenAI Chief Global Affairs Officer Christopher Lehane. It asked the OSTP to “double down” and work with Congress to “further extend eligibility to the semiconductor manufacturing supply chain; grid components like transformers and specialized steel for their production; AI server production; and AI data centers.”

The letter then said, “To provide manufacturers with the certainty and capital they need to scale production quickly, the federal government should also deploy grants, cost-sharing agreements, loans, or loan guarantees to expand industrial base capacity and resilience.”

Altman has yet to address the letter.

Artificial intelligence is not your friend

Half of Americans say they are lonely and isolated — and artificial intelligence is stepping into the void.

Sam Altman recently announced that OpenAI will soon provide erotica for lonely adults. Mark Zuckerberg envisions a future in which solitary people enjoy AI friends. According to the Harvard Business Review, the top uses for large language models are therapy and companionship.

Lonely people don’t need better algorithms. We need better friends — and the courage to be one.

It’s easy to see why this is happening. AI is always available, endlessly patient, and unfailingly agreeable. Millions now pour their secrets into silicon confidants, comforted by algorithms that respond with affirmation and tact.

But what masquerades as friendship is, in fact, a dangerous substitute. AI therapy and friendship burrow us deeper into ourselves when what we most need is to reach out to others.

As Jordan Peterson once observed, “Obsessive concern with the self is indistinguishable from misery.” That is the trap of AI companionship.

Hall of mirrors

AI echoes back your concerns, frames its answers around your cues, and never asks anything of you. At times, it may surprise you with information, but the conversation still runs along tracks you have laid. In that sense, every exchange with AI is solipsistic — a hall of mirrors that flatters the self but never challenges it.

It can’t grow with you to become more generous, honorable, just, or patient. Ultimately, every interaction with AI cultivates a narrow self-centeredness that only increases loneliness and unhappiness.

Even when self-reflection is necessary, AI falls short. It cannot read your emotions, adjust its tone, or provide physical comfort. It can’t inspire courage, sit beside you in silence, or offer forgiveness. A chatbot can only mimic what it has never known.

Most damaging of all, it can’t truly empathize. No matter what words it generates, it has never suffered loss, borne responsibility, or accepted love. Deep down, you know it doesn’t really understand you.

With AI, you can talk all you want. But you will never be heard.

Humans need love, not algorithms

Humans are social animals. We long for love and recognition from other humans. The desire for friendship is natural. But people are looking where no real friend can be found.

Aristotle taught that genuine friendship is ordered toward a common good and requires presence, sacrifice, and accountability. Unlike friendships of utility or pleasure — which dissolve when benefit or amusement fades — true friendship endures, because it calls each person to become better than they are.

Today, the word “friend” is often cheapened to a mere social-media connection, making Aristotelian friendship — rooted in virtue and sacrifice — feel almost foreign. Yet it comes alive in ancient texts, which show the heights that true friendship can inspire.

Real friendships are rooted in ideals older than machines and formed through shared struggles and selfless giving.

In Homer’s “Iliad,” Achilles and Patroclus shared an unbreakable bond forged in childhood and through battle. When Patroclus was killed, Achilles’ rage and grief changed the course of the Trojan War and of history. The Bible describes the friendship of Jonathan and David, whose devotion to one another, to their people, and to God transcended ambition and even family ties: “The soul of Jonathan was knit with the soul of David.”

These friendships were not one-sided projections. They were built upon shared experiences and selflessness that artificial intelligence can never offer.

Each time we choose the easy route of AI companionship over the hard reality of human relationships, we render ourselves less available and less able to achieve the true friendship our ancestors enjoyed.

Recovering genuine friendship requires forming people who are capable of being friends. People must be taught how to speak, listen, and seek truth together — something our current educational system has largely forgotten.

Classical education offers a remedy, reviving these habits of human connection by immersing students in the great moral and philosophical conversations of the past. Unlike modern classrooms, where students passively absorb information, classical seminars require them to wrestle together over what matters most: love in Plato’s “Symposium,” restlessness in Augustine’s “Confessions,” loss in Virgil’s “Aeneid,” or reconciliation in Shakespeare’s “King Lear.”

These dialogues force students to listen carefully, speak honestly, and allow truth — not ego — to guide the exchange. They remind us that friendship is not built on convenience but on mutual searching, where each participant must give as well as receive.

Reclaiming humanity

In a world tempted by the frictionless ease of talking to machines, classical education restores human encounters. Seminars cultivate the courage to confront discomfort, admit error, and grapple with ideas that challenge our assumptions — a rehearsal for the moral and social demands of real friendship.

Photo by Yuichiro Chino via Getty Images

Is classroom practice enough for friendship? No. But it plants the seeds. Habits of conversation, humility, and shared pursuit of truth prepare students to form real friendships through self-sacrifice outside the classroom: to cook for an exhausted co-worker, to answer the late-night call for help, to lovingly tell another he or she is wrong, to simply be present while someone grieves.

It’s difficult to form friendships in the modern world, where people are isolated in their homes, occupied by screens, and vexed by distractions and schedules. Technology tempts us with the illusion of effortless companionship — someone who is always where you are, whenever you want to talk. Like all fantasies, it can be pleasant for a time. But it’s not real.

Real friendships are rooted in ideals older than machines and formed through shared struggles and selfless giving.

Lonely people don’t need better algorithms. We need better friends — and the courage to be one.

Editor’s note: This article was published originally in the American Mind.

Liberals, heavy porn users more open to having an AI friend, new study shows

A small but significant percentage of Americans say they are open to having a friendship with artificial intelligence, while some are even open to romance with AI.

The figures come from a new study by the Institute for Family Studies and YouGov, which surveyed American adults under 40 years old. Their data revealed that while very few young Americans are already friends with some sort of AI, about 10 times that number are open to it.

‘It signals how loneliness and weakened human connection are driving some young adults.’

Just 1% of Americans under 40 who were surveyed said they were already friends with an AI. However, a staggering 10% said they are open to the idea. With 2,000 participants surveyed, that’s 200 people who said they might be friends with a computer program.

Liberals were more likely than conservatives to say they are open to befriending AI or are already in such a friendship: 14% of liberals vs. 9% of conservatives.

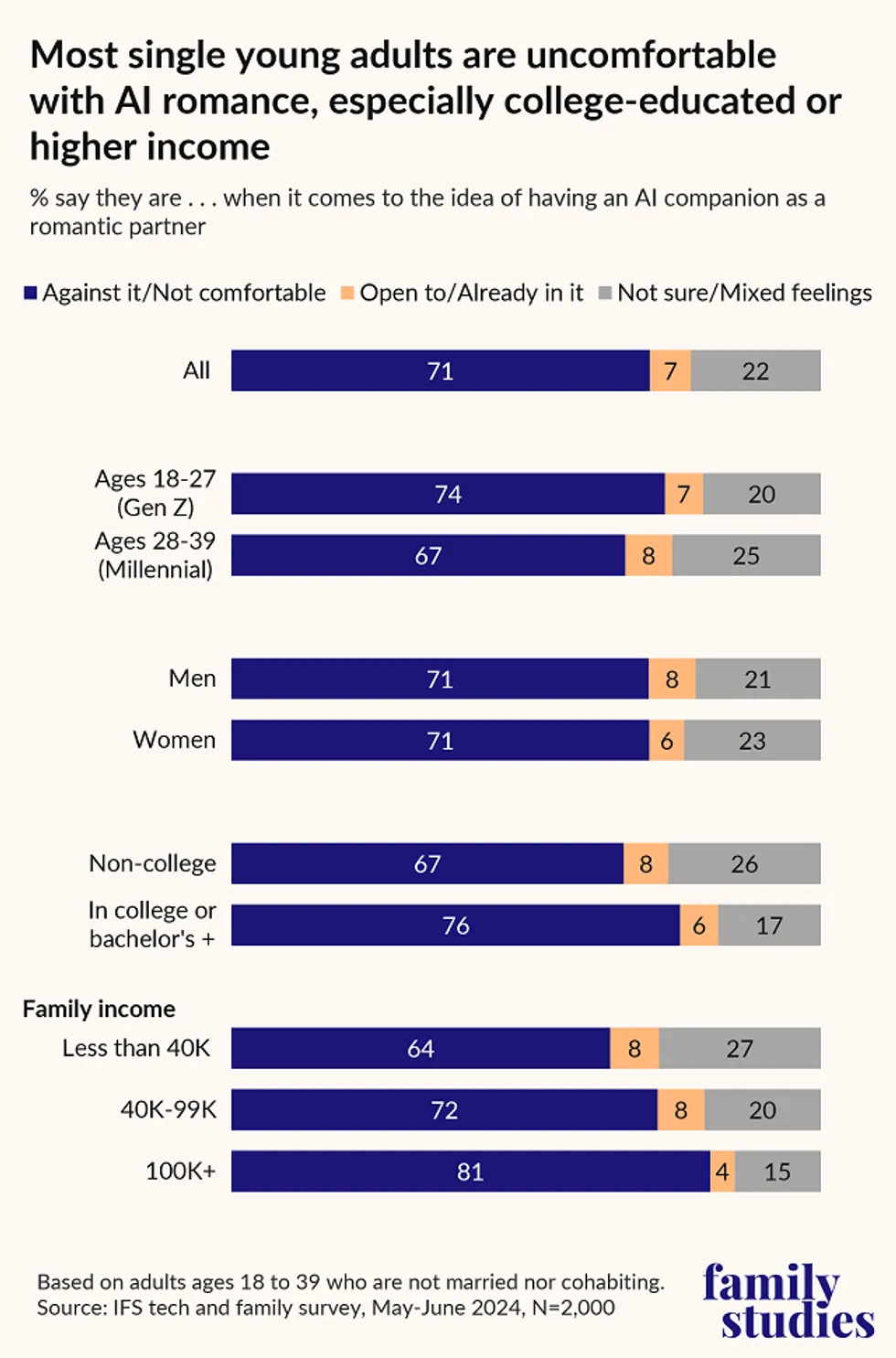

The idea of being in a “romantic” relationship with AI, not just a friendship, again produced some troubling — or scientifically relevant — responses.

When it comes to young adults who are not married or “cohabitating,” 7% said they are open to the idea of being in a romantic partnership with AI.

At the same time, a larger percentage of young adults think that AI has the potential to replace real-life romantic relationships; that number sits at a whopping 25%, or 500 respondents.

There is a large overlap with frequent pornography users: The more frequently respondents say they consume online porn, the more likely they are to be open to having an AI as a romantic partner or to already be in such a relationship.

Only 5% of those who said they never consume porn, or do so “a few times a year,” said they were open to an AI romantic partner.

That number goes up to 9% for those who watch porn between once or twice a month and several times per week. For those who watch online porn daily, the number was 11%.

Overall, young adults who are heavy porn users were the group most open to having an AI girlfriend or boyfriend, in addition to being the most open to an AI friendship.

Graphic courtesy Institute for Family Studies

“Roughly one in 10 young Americans say they’re open to an AI friendship — but that should concern us,” Dr. Wendy Wang of the Institute for Family Studies told Blaze News.

“It signals how loneliness and weakened human connection are driving some young adults to seek emotional comfort from machines rather than people,” she added.

Another interesting statistic to take home from the survey was the fact that young women were more likely than men to perceive AI as a threat in general, with 28% agreeing with the idea vs. 23% of men. Women are also less excited about AI’s effect on society; just 11% of women were excited vs. 20% of men.

A new study hints at what happens when superintelligence gets brain rot, just like us

AI and LLMs appear to be in a bit of a slump, with the latest revelatory scandal coming out of a major study showing that large language models, the closest we’ve come yet to so-called artificial general intelligence, are degraded in their capacities when they are subjected to lo-fi, low-quality, and “junk” content.

The study, from a triad of college computer science departments including the University of Texas, set out to determine the relationship between data quality and performance in LLMs. The scientists trained their LLMs on viral X.com/Twitter data, emphasizing high-engagement posts, and observed a reduction of more than 20% in reasoning capacity and falloffs of 30% in contextual memory tasks. Perhaps most ominously, since the study also tested for measurable personality traits like agreeableness and extraversion, the scientists saw a leap in output that can technically be characterized as narcissistic and psychopathic.

Sound familiar?

The paper analogizes the function of the LLM performance with human cognitive performance and refers to this degradation in both humans and LLMs as “brain rot,” a “shorthand for how endless, low-effort, engagement-bait content can dull human cognition — eroding focus, memory discipline, and social judgment through compulsive online consumption.”

There is no great or agreed-upon utility in cognition-driven analogies made between human and computer performance. The temptation persists for computer scientists and builders to read in too much, making category errors with respect to cognitive capacities, definitions of intelligence, and so forth. The temptation is to imagine that our creative capacities ‘out there’ are somehow reliable mirrors of the totality of our beings ‘in here,’ within our experience as humans.

We’ve seen something similar this year with the prevalence of so-called LLM psychosis. In yet another example of confusing terminology applied to already confused problems, the term describes neither psychosis embedded in LLMs nor psychosis measured in their “behavior,” but rather the severe mental illness reported by many people after investing themselves, their attention, and their belief in computer-contained AI “personages” such as Claude or Grok. Why do they need names anyway? LLM 12-V1, for example, would be fine …

The “brain rot” study rather proves, if anything, that the project of creating AI is getting a little discombobulated within the metaphysical hall of mirrors its creators, backers, and believers have, so far, barged their way into, heedless of old-school measures like maps, armor, transport, a genuine plan. The whole project reeks of hubris, reeks of avarice and power. Yet, on the other hand, the inevitability of the integration of AI into society, into the project of terraforming the living earth, isn’t really being approached by a politically, or even financially, authoritative and responsible body — one which might perform the machine-yoking, human-compassion measures required if we’re to imagine ourselves marching together into and through that hall of mirrors to a hyper-advanced, technologically stable, and human-populated civilization.

Photo by VCG / Contributor via Getty Images

So, when it’s observed here that AI seems to be in a bit of a slump — perhaps even a feedback loop of idiocy, greed, and uncertainty coupled, literally wired-in now, with the immediate survival demands of the human species — it’s not a thing we just ignore. A signal suggesting as much erupted last week from a broad coalition of high-profile media, business, faith, and arts voices brought under the aegis of the Statement on Superintelligence, which called for “a prohibition on the development of superintelligence, not lifted before there is 1. broad scientific consensus that it will be done safely and controllably, and 2. strong public buy-in.”

There’s a balance, there are competing interests, and we’re all still living under a veil of commercial and mediated fifth-generation warfare. There’s a sort of adults-in-the-room quality we are desperately lacking at the moment. But the way the generational influences lie on the timeline isn’t helping. With boomers largely tech-illiterate but still hanging on, with Xers tech-literate but stuck in the middle (as ever), with huge populations of highly tech-saturated Millennials, Zoomers, and so-called Generation Alpha waiting for their promised piece of the social contract, the friction heat is gathering. We would do well to recognize the stakes and thus honor the input of those future humans who shouldn’t have to be born into or navigate a hall of mirrors their predecessors failed to escape.
