Category: Artificial intelligence

Karp’s Quest to Save the Shire

“You’re killing my family in Palestine!” a protester screamed at Palantir CEO Alex Karp while he was addressing a Silicon Valley conference last April. “The primary source of death in Palestine,” Karp, the Jewish, half-black, progressive tai chi practitioner, shot back without missing a beat, “is the fact that Hamas has realized there are millions and millions of useful idiots.”



Google boss compares replacing humans with AI to getting a fridge for the first time

The head of Google’s parent company says welcoming artificial intelligence into daily life is akin to buying a refrigerator.

Alphabet’s chief executive, Indian-born Sundar Pichai, gave a revealing interview to the BBC this week in which he asked the general population to get on board with automation through AI.

‘Our first refrigerator …. radically changed my mom’s life.’

The BBC’s Faisal Islam, whose parents are from India, asked the Indian-American executive if the purpose of his AI products was to automate human tasks and essentially replace jobs with programming.

Pichai claimed that AI should be welcomed because humans are “overloaded” and “juggling many things.”

He then compared using AI to welcoming the technology that a dishwasher or fridge once brought to the average home.

“I remember growing up, you know, when we got our first refrigerator in the home — how much it radically changed my mom’s life, right? And so you can view this as automating some, but you know, freed her up to do other things, right?”

Islam fired back, citing the common complaints heard from the middle class who are concerned with job loss in fields like creative design, accounting, and even “journalism too.”

“Do you know which jobs are going to be safer?” he asked Pichai.


The Alphabet chief was steadfast in his touting of AI’s “extraordinary benefits” that will “create new opportunities.”

At the same time, he said the general population will “have to work through societal disruptions” as certain jobs “evolve” and transition.

“People need to adapt,” he continued. “Then there would be areas where it will impact some jobs, so society — I mean, we need to be having those conversations. And part of it is, how do you develop this technology responsibly and give society time to adapt as we absorb these technologies?”

Despite branding Google Gemini as a force for good that should be embraced, Pichai strangely admitted at the same time that chatbots are not foolproof by any means.


“This is why people also use Google search,” Pichai said in regard to AI’s proclivity to present inaccurate information. “We have other products that are more grounded in providing accurate information.”

The 53-year-old told the BBC that it was up to the user to learn how to use AI tools for “what they’re good at” and not “blindly trust everything they say.”

The answer seems at odds with the wonder of AI he championed throughout the interview, especially when considering his additional commentary about the technology being prone to mistakes.

“We take pride in the amount of work we put in to give us as accurate information as possible, but the current state-of-the-art AI technology is prone to some errors.”

Like Blaze News? Bypass the censors, sign up for our newsletters, and get stories like this direct to your inbox. Sign up here!


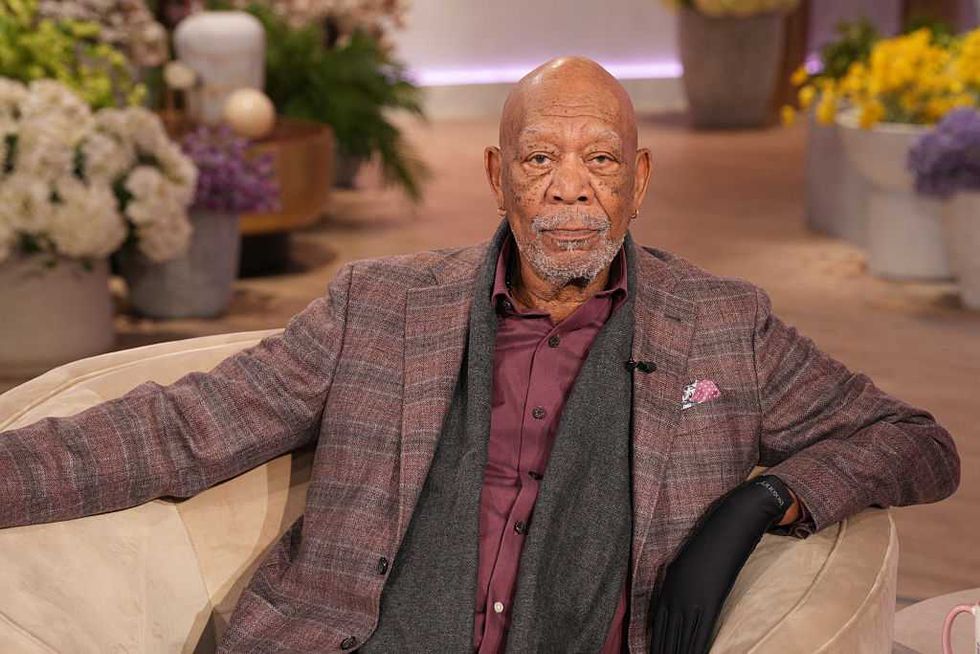

‘You’re robbing me’: Morgan Freeman slams Tilly Norwood, AI voice clones

The use of celebrity likeness for AI videos is spiraling out of control, and one of Hollywood’s biggest stars is not having it.

Despite the use of AI in online videos being fairly new, it has already become a trope to use an artificial version of a celebrity’s voice for content relating to news, violence, or history.

‘I don’t appreciate it, and I get paid for doing stuff like that.’

This is particularly true when it comes to satirical videos that are meant to sound like documentaries. Creators love to use recognizable voices, like David Attenborough’s and, of course, Morgan Freeman’s, whose voice has become so recognizable that others have labeled him as “the voice of God.”

However, the 88-year-old Freeman is not pleased about his voice being replicated. In an interview with the Guardian, he said that while some actors, like James Earl Jones (the voice of Darth Vader), have consented to having their voices imitated with computers, he has not.

“I’m a little PO’d, you know,” Freeman told the outlet. “I’m like any other actor: Don’t mimic me with falseness. I don’t appreciate it, and I get paid for doing stuff like that, so if you’re gonna do it without me, you’re robbing me.”

Freeman explained that his lawyers have been “very, very busy” in pursuing “many … quite a few” cases in which his voice was replicated without his consent.

In the same interview, the Memphis native was also not shy about criticizing the concept of AI actors.


Photo by Chris Haston/WBTV via Getty Images


Freeman was asked about Tilly Norwood, the AI character introduced by Dutch actress Eline Van der Velden in 2025. The pretend-world character is meant to be an avatar mimicking celebrity status, while also cutting costs in the casting room.

“Nobody likes her because she’s not real and that takes the part of a real person,” Freeman jabbed. “So it’s not going to work out very well in the movies or in television. … The union’s job is to keep actors acting, so there’s going to be that conflict.”

Freeman spoke out about the use of his voice in 2024, as well. According to a report by 4 News Now, a TikTok creator posted a video claiming to be Freeman’s niece and used an artificial version of his voice to narrate the video.

In response, Freeman wrote on X, “Thank you to my incredible fans for your vigilance and support in calling out the unauthorized use of an A.I. voice imitating me.”

He added, “Your dedication helps authenticity and integrity remain paramount. Grateful.”


Norwood is not the first attempt at taking an avatar mainstream. In 2022, Capitol Records flirted with an AI rapper named FN Meka; the very idea that the rapper was even signed to a label was historic in the first place.

The rapper, or more likely its representatives, were later dropped from the label after activists claimed the character reinforced racial stereotypes.


Middle school boy faces 10 felonies in AI nude scandal. But expulsion of girl, 13 — an alleged victim — sparks firestorm.

A Louisiana middle school boy is facing 10 felony counts for using AI to create fake nude photos of female classmates and sharing them with other students, according to multiple reports. However, one alleged female victim has been expelled following her reported reaction to the scandal.

On Aug. 26, detectives with the Lafourche Parish Sheriff’s Office launched an investigation into reports that male students had shared fake nude photos of female classmates at the Sixth Ward Middle School in Choctaw.

‘What’s going on here, I’ll be quite frank, is nothing more than disgusting.’

Benjamin Comeaux, an attorney representing the alleged female victim, said the images used real photos of the girls, including selfies, with AI-generated nude bodies, the Washington Post reported.

Comeaux said administrators reported the incident to the school resource officer, according to the Post.

The Lafourche Parish Sheriff’s Office said in a statement that the incident “led to an altercation on a school bus involving one of the male students and one of the female students.”

Comeaux said that during a bus ride, several boys shared AI-made nude images of a 13-year-old girl, and the girl in question struck one of the students sharing the images, the Post reported.

However, school administrators expelled the 13-year-old girl over the physical altercation.

Meanwhile, police said that a male suspect on Sept. 15 was charged with 10 counts of unlawful dissemination of images created by artificial intelligence.

The sheriff’s office noted that the investigation is ongoing, and there is a possibility of additional arrests and charges.

Sheriff Craig Webre noted that the female student involved in the alleged bus fight will not face criminal charges “given the totality of the circumstances.”

Webre added that the investigation involves technology and social media platforms, and that it could take several weeks or even months to “attain and investigate digital evidence.”

The alarming incident resurfaced at a fiery Nov. 5 school board meeting, where attorneys for the expelled female student slammed school administrators.

According to WWL-TV, an attorney said, “She had enough, what is she supposed to do?”

“She reported it to the people who are supposed to protect her, but she was victimized, and finally she tried to knock the phone out of his hand and swat at him,” the same attorney added.

One attorney also noted, “This was not a random act of violence … this was a reasonable response to what this kid endured, and there were so many options less than expulsion that could’ve been done. Had she not been a victim, we’re not here, and none of this happens.”

Her representatives also warned, “You are setting a dangerous precedent by doing anything other than putting her back in school,” according to WWL.

Matthew Ory, one of the attorneys representing the female student, declared, “What’s going on here, I’ll be quite frank, is nothing more than disgusting. Her image was taken by artificial intelligence and manipulated and manufactured to be child pornography.”

School board member Valerie Bourgeois pushed back by saying, “Yes, she is a victim, I agree with that, but if she had not hit the young man, we wouldn’t be here today, it wouldn’t have come to an expulsion hearing.”

Tina Babin, another school board member, added, “I found the video on the bus to be sickening, the whole thing, everything about it, but the fact that this child went through this all day long does weigh heavy on me.”

Lafourche Parish Public Schools Superintendent Jarod Martin explained, “Sometimes in life, we can be both victims and perpetrators. Sometimes in life, horrible things happen to us, and we get angry and do things.”

Ultimately, the school board allowed the girl to return to school, but she will be on probation until January.

Attorneys for the girl’s family, Greg Miller and Morgyn Young, told WWL that they intend to file a lawsuit.

“Nobody took any action to confiscate cell phones, to put an end to this,” Miller claimed. “It’s pure negligence on the part of the school board.”

Martin defended the district in a statement that read:

Any and all allegations of criminal misconduct on our campuses are immediately reported to the Lafourche Parish Sheriff’s Office. After reviewing this case, the evidence suggests that the school did, in fact, follow all of our protocols and procedures for reporting such instances.

Sheriff Webre warned, “While the ability to alter images has been available for decades, the rise of AI has made it easier for anyone to alter or create such images with little to no training or experience.”

Webre also said, “This incident highlights a serious concern that all parents should address with their children.”


1980s-inspired AI companion promises to watch and interrupt you: ‘You can see me? That’s so cool’

A tech entrepreneur is hoping casual AI users and businesses alike are looking for a new pal.

In this case, “PAL” is a floating term that can mean either a complimentary video companion or a replacement for a human customer service worker.

‘I love the print on your shirt; you’re looking sharp today.’

Tech company Tavus calls PALs “the first AI built to feel like real humans.”

Overall, Tavus’ messaging is seemingly directed toward both those seeking an artificial friend and those looking to streamline their workforce.

As a friend, the avatar will allegedly “reach out first” and contact the user by text or video call. It can allegedly anticipate “what matters” and step in “when you need them the most.”

In an X post, founder Hassaan Raza spoke about PALs being emotionally intelligent and capable of “understanding and perceiving.”

The AI bots are meant to “see, hear, reason,” and “look like us,” he wrote, further cementing the use of the technology as companion-worthy.

“PALs can see us, understand our tone, emotion, and intent, and communicate in ways that feel more human,” Raza added.

In a promotional video for the product, the company showcased basic interactions between a user and the AI buddy.


A woman is shown greeting the “digital twin” of Raza, as he appears as a lifelike AI PAL on her laptop.

Raza’s AI responds, “Hey, Jessica. … I’m powered by the world’s fastest conversational AI. I can speak to you and see and hear you.”

Excited by the notion, Jessica responds, “Wait, you can see me? That’s so cool.”

The woman then immediately seeks superficial validation from the artificial person.

“What do you think of my new shirt?” she asks.

The AI lives up to the trope that chatbots are largely agreeable no matter the subject matter and says, “I love the print on your shirt; you’re looking sharp today.”

After the pleasantries are over, Raza’s AI goes into promo mode and boasts about its ability to use “rolling vision, voice detection, and interruptibility” to seem more lifelike for the user.

The video soon shifts to messaging about corporate integration meant to replace low-wage employees.

Describing the “digital twins” or AI agents, Raza explains that the AI program is an opportunity to monetize celebrity likeness or replace sales agents or customer support personnel. He claims the avatars could also be used in corporate training modules.


The interface of the future is human.

We’ve raised a $40M Series B from CRV, Scale, Sequoia, and YC to teach machines the art of being human, so that using a computer feels like talking to a friend or a coworker.

And today, I’m excited for y’all to meet the PALs: a new… pic.twitter.com/DUJkEu5X48

— Hassaan Raza (@hassaanrza) November 12, 2025

In his X post, Raza also attempted to flex his acting chops by creating a 200-second film about a man/PAL named Charlie who is trapped in a computer in the 1980s.

Raza revives the computer after it spent 40 years on the shelf, finding Charlie still trapped inside. In an attempt at comedy, Charlie asks Raza if flying cars or jetpacks exist yet. Raza responds, “We have Salesforce.”

The founder goes on to explain that PALs will “evolve” with the user, remembering preferences and needs. While these features are presented as groundbreaking, the PAL essentially amounts to being an AI face attached to an ongoing chatbot conversation.

AI users know that modern chatbots like Grok or ChatGPT are fully capable of remembering previous discussions and building upon what they have already learned. What’s seemingly new here is the AI being granted app permissions to contact the user and further infiltrate personal space.

Whether that annoys the user or is exactly what the person needs or wants is up for interpretation.


Trump tech czar slams OpenAI scheme for federal ‘backstop’ on spending — forcing Sam Altman to backtrack

OpenAI is under the spotlight after seemingly asking for the federal government to provide guarantees and loans for its investments.

Now, as the company is walking back its statements, a recent OpenAI letter has resurfaced that may prove it is talking in circles.

‘We’re always being brought in by the White House …’

The artificial intelligence company is predominantly known for its free and paid versions of ChatGPT. Microsoft, its key investor, has sunk more than $13 billion into the company and holds a 27% stake.

The recent controversy stems from an interview OpenAI chief financial officer Sarah Friar gave to the Wall Street Journal. Friar said in the interview, published Wednesday, that OpenAI had goals of buying up the latest computer chips before its competition could, which would require sizeable investment.

“This is where we’re looking for an ecosystem of banks, private equity, maybe even governmental … the way governments can come to bear,” Friar said, per Tom’s Hardware.

Reporter Sarah Krouse asked for clarification on the topic, which is when Friar expressed interest in federal guarantees.

“First of all, the backstop, the guarantee that allows the financing to happen, that can really drop the cost of the financing but also increase the loan to value, so the amount of debt you can take on top of an equity portion for —” Friar continued, before Krouse interrupted, seeking clarification.

“[A] federal backstop for chip investment?”

“Exactly,” Friar said.

Krouse bored in further on the point, asking whether Friar had been speaking to the White House about how to “formalize” the “backstop.”

“We’re always being brought in by the White House, to give our point of view as an expert on what’s happening in the sector,” Friar replied.

After these remarks were publicized, OpenAI immediately backtracked.


On Wednesday night, Friar posted on LinkedIn that “OpenAI is not seeking a government backstop” for its investments.

“I used the word ‘backstop’ and it muddied the point,” she continued. She went on to claim that the full clip showcased her point that “American strength in technology will come from building real industrial capacity which requires the private sector and government playing their part.”

On Thursday morning, David Sacks, President Trump’s special adviser on crypto and AI, stepped in to crush any of OpenAI’s hopes of government guarantees, even if they were only alleged.

“There will be no federal bailout for AI,” Sacks wrote on X. “The U.S. has at least 5 major frontier model companies. If one fails, others will take its place.”

Sacks added that the White House does want to make power generation easier for AI companies, but without increasing residential electricity rates.

“Finally, to give benefit of the doubt, I don’t think anyone was actually asking for a bailout. (That would be ridiculous.) But company executives can clarify their own comments,” he concluded.

The saga was far from over, though, as OpenAI CEO Sam Altman seemingly dug the hole even deeper.


By Thursday afternoon, Altman had released a lengthy statement starting with his rejection of the idea of government guarantees.

“We do not have or want government guarantees for OpenAI datacenters. We believe that governments should not pick winners or losers, and that taxpayers should not bail out companies that make bad business decisions or otherwise lose in the market. If one company fails, other companies will do good work,” he wrote on X.

He went on to explain that it was an “unequivocal no” that the company should be bailed out. “If we screw up and can’t fix it, we should fail.”

It wasn’t long before the online community started claiming that OpenAI was indeed asking for government help as recently as a week prior.

As originally noted by the X account hilariously titled “@IamGingerTrash,” OpenAI has a letter posted on its own website that seems to directly ask for government guarantees. However, as Sacks noted, it does seem to relate to powering servers and providing electrical capacity.

Dated October 27, 2025, the letter was directed to the U.S. Office of Science and Technology Policy from OpenAI Chief Global Affairs Officer Christopher Lehane. It asked the OSTP to “double down” and work with Congress to “further extend eligibility to the semiconductor manufacturing supply chain; grid components like transformers and specialized steel for their production; AI server production; and AI data centers.”

The letter then said, “To provide manufacturers with the certainty and capital they need to scale production quickly, the federal government should also deploy grants, cost-sharing agreements, loans, or loan guarantees to expand industrial base capacity and resilience.”

Altman has yet to address the letter.


Stop feeding Big Tech and start feeding Americans again

America needs more farmers, ranchers, and private landholders — not more data centers and chatbots. Yet the federal government is now prioritizing artificial intelligence over agriculture, offering vast tracts of public land to Big Tech while family farms and ranches vanish and grocery bills soar.

Conservatives have long warned that excessive federal land ownership, especially in the West, threatens liberty and prosperity. The Trump administration shares that concern but has taken a wrong turn by fast-tracking AI infrastructure on government property.


Instead of devolving control to the states or private citizens, it’s empowering an industry that already consumes massive resources and delivers little tangible value to ordinary Americans. And this is on top of Interior Secretary Doug Burgum’s execrable plan to build 15-minute cities and “affordable housing.”

In July, President Trump signed an executive order titled “Accelerating Federal Permitting of Data Center Infrastructure” as part of his administration’s AI Action Plan. The order streamlines permits, grants financial incentives, and opens federal properties — from Superfund sites to military bases — to AI-related development. The Department of Energy quickly identified four initial sites: Oak Ridge Reservation in Tennessee, Idaho National Laboratory, the Paducah Gaseous Diffusion Plant in Kentucky, and the Savannah River Site in South Carolina.

Last month, the list expanded to include five Air Force bases — Arnold (Tennessee), Davis-Monthan (Arizona), Edwards (California), Joint Base McGuire-Dix-Lakehurst (New Jersey), and Robins (Georgia) — totaling over 3,000 acres for lease to private developers at fair market value.

Locating AI facilities on military property is preferable to disrupting residential or agricultural communities, but the favoritism shown to Big Tech raises an obvious question: Is this the best use of public land? And will anchoring these bubble companies on federal property make them “too big to fail,” just like the banks and mortgage lenders before the 2008 crash?

President Trump has acknowledged the shortage of affordable meat as a national crisis. If any industry deserves federal support, it’s America’s independent farmers and ranchers. Yet while Washington clears land for billion-dollar data centers, small producers are disappearing. In the past five years, the U.S. has lost roughly 141,000 family farms and 150,000 cattle operations. The national cattle herd is at its lowest level since 1951. Since 1982, America has lost more than half a million farms — nearly a quarter of its total.

Multiple pressures — rising input costs, droughts, and inflation — have crippled family farms that can’t compete with corporate conglomerates. But federal land policy also plays a role. The government’s stranglehold on Western lands limits grazing rights, water access, and expansion opportunities. If Washington suddenly wants to sell or lease public land, why not prioritize ranchers who need it for feed and forage?

The Conservation Reserve Program compounds the problem. The 2018 Farm Bill extension locked up to 30 million acres of land — five million in Wyoming and Montana alone — under the guise of conservation. Wealthy absentee owners exploit the program by briefly “farming” land to qualify it as cropland, then retiring it into CRP to collect taxpayer payments. More than half of CRP acreage is owned by non-farmers, some earning over $200 per acre while the land sits idle.


Photo by Brian Kaiser/Bloomberg via Getty Images


Those acres could support hundreds of cattle per section or produce millions of tons of hay. Instead, they create artificial shortages that drive up feed costs. During the post-COVID inflation spike, hay prices jumped 40%, hitting $250 per ton this year. Even now, inflated prices cost ranchers six figures a year in extra expenses in a business that operates on thin margins.

If the nation needs a new Manhattan Project, it should be for food security, not AI slop. Free up federal lands and idle CRP acreage for productive use. Help ranchers grow herds and lower food prices instead of subsidizing a speculative industry already bloated with venture capital and hype.

At present, every dollar of revenue at OpenAI costs roughly $7.77 to generate — a debt spiral that invites the next taxpayer bailout. By granting these firms privileged access to public land, the government risks creating another class of untouchable corporate wards, as it did with Fannie Mae and Freddie Mac two decades ago.

AI won’t feed Americans. It won’t fix supply chains. It won’t lower grocery bills. Until these companies can put real food on real tables, federal land should serve the purpose God intended — to sustain the people who live and work upon it.

AI can fake a face — but not a soul

The New York Times recently profiled Scott Jacqmein, an actor from Dallas who sold his likeness to TikTok for $750 and a free trip to the Bay Area. He hasn’t landed any TV shows, movies, or commercials, but his AI-generated likeness has — a virtual version of Jacqmein is now “acting” in countless ads on TikTok. As the Times put it, Jacqmein “fields one or two texts a week from acquaintances and friends who are pretty sure they have seen him pitching a peculiar range of businesses on TikTok.”

Now, Jacqmein “has regrets.” But why? He consented to sell his likeness. His image isn’t being used illegally. He wanted to act, and now — at least digitally — he’s acting. His regrets seem less about ethics and more about economics.


Times reporter Sapna Maheshwari suggests as much. Her story centers on the lack of royalties and legal protections for people like Jacqmein.

She also raises moral concerns, citing examples where digital avatars were used to promote objectionable products or deliver offensive messages. In one case, Jacqmein’s AI double pitched a “male performance supplement.” In another, a TikTok employee allegedly unleashed AI avatars reciting passages from Hitler’s “Mein Kampf.” TikTok removed the tool that made the videos possible after CNN brought the story to light.

When faces become property

These incidents blur into a larger problem — the same one raised by deepfakes. In recent months, digital impostors have mimicked public figures from Bishop Robert Barron to the pope. The Vatican itself has had to denounce fake homilies generated in the likeness of Leo XIV. Such fabrications can mislead, defame, or humiliate.

But the deepest problem with digital avatars isn’t that they deceive. It’s that they aren’t real.

Even if Jacqmein were paid handsomely and religious figures embraced synthetic preaching as legitimate evangelism, something about the whole enterprise would remain wrong. Selling one’s likeness is a transaction of the soul. It’s unsettling because it treats what’s uniquely human — voice, gesture, and presence — as property to be cloned and sold.

When a person licenses his “digital twin,” he doesn’t just part with data. He commodifies identity itself. The actor’s expressions, tone, and mannerisms become a bundle of intellectual property. Someone else owns them now.

That’s why audiences instinctively recoil at watching AI puppets masquerade as and mimic people. Even if the illusion is technically impressive, it feels hollow. A digital replica can’t evoke the same moral or emotional response as a real human being.

Selling the soul

This isn’t a new theme in art or philosophy. In a classic “Simpsons” episode, Bart sells his soul to his pal Milhouse for $5 and soon feels hollow, haunted by nightmares, convinced he’s lost something essential. The joke carries a metaphysical truth: When we surrender what defines us as human — even symbolically — we suffer a real loss.

For those who believe in an immortal soul, as Jesuit philosopher Robert Spitzer argues in “Science at the Doorstep to God,” this loss is more than psychological. To sell one’s likeness is to treat the image of the divine within as a market commodity. The transaction might seem trivial — a harmless digital contract — but the symbolism runs deep.

Oscar Wilde captured this inversion of morality in “The Picture of Dorian Gray.” His protagonist stays eternally young while his portrait, the mirror of his soul, decays. In our digital age, the roles are reversed: The AI avatar remains young and flawless while the human model ages, forgotten and spiritually diminished.

Jacqmein can’t destroy his portrait. It’s contractually owned by someone else. If he wants to stop his digital self from hawking supplements or energy drinks, he’ll need lawyers — and he’ll probably lose. He’s condemned to watch his AI double enjoy a flourishing career while he struggles to pay rent. The scenario reads like a lost episode of “Black Mirror” — a man trapped in a parody of his own success. (In fact, “The Waldo Moment” and “Hang the DJ” come close to this.)


Photo by imaginima via Getty Images


The moral exit

The conventional answer to this dilemma is regulation: copyright reforms, consent standards, watermarking requirements. But the real solution begins with refusal. Actors shouldn’t sell their avatars. Consumers shouldn’t support platforms that replace people with synthetic ghosts.

If TikTok and other media giants populate their feeds with digital clones, users should boycott them and demand “fair-trade human content.” Just as conscientious shoppers insist on buying ethically sourced goods, viewers should insist on art and advertising made by living, breathing humans.

Tech evangelists argue that AI avatars will soon become indistinguishable from the people they’re modeled on. But that misses the point. The more perfect the imitation, the greater the lie. What people crave isn’t flawless illusion — it’s authenticity. They want to see imperfection, effort, and presence. They want to see life.

If we surrender that, we’ll lose something far more valuable than any acting career or TikTok deal. We’ll lose the very thing that makes us human.

Artificial intelligence is not your friend

Half of Americans say they are lonely and isolated — and artificial intelligence is stepping into the void.

Sam Altman recently announced that OpenAI will soon provide erotica for lonely adults. Mark Zuckerberg envisions a future in which solitary people enjoy AI friends. According to the Harvard Business Review, the top uses for large language models are therapy and companionship.

It’s easy to see why this is happening. AI is always available, endlessly patient, and unfailingly agreeable. Millions now pour their secrets into silicon confidants, comforted by algorithms that respond with affirmation and tact.

But what masquerades as friendship is, in fact, a dangerous substitute. AI therapy and friendship burrow us deeper into ourselves when what we most need is to reach out to others.

As Jordan Peterson once observed, “Obsessive concern with the self is indistinguishable from misery.” That is the trap of AI companionship.

Hall of mirrors

AI echoes back your concerns, frames its answers around your cues, and never asks anything of you. At times, it may surprise you with information, but the conversation still runs along tracks you have laid. In that sense, every exchange with AI is solipsistic — a hall of mirrors that flatters the self but never challenges it.

It can’t grow with you to become more generous, honorable, just, or patient. Ultimately, every interaction with AI cultivates a narrow self-centeredness that only increases loneliness and unhappiness.

Even when self-reflection is necessary, AI falls short. It cannot read your emotions, adjust its tone, or provide physical comfort. It can’t inspire courage, sit beside you in silence, or offer forgiveness. A chatbot can only mimic what it has never known.

Most damaging of all, it can’t truly empathize. No matter what words it generates, it has never suffered loss, borne responsibility, or accepted love. Deep down, you know it doesn’t really understand you.

With AI, you can talk all you want. But you will never be heard.

Humans need love, not algorithms

Humans are social animals. We long for love and recognition from other humans. The desire for friendship is natural. But people are looking where no real friend can be found.

Aristotle taught that genuine friendship is ordered toward a common good and requires presence, sacrifice, and accountability. Unlike friendships of utility or pleasure — which dissolve when benefit or amusement fades — true friendship endures, because it calls each person to become better than they are.

Today, the word “friend” is often cheapened to a mere social-media connection, making Aristotelian friendship — rooted in virtue and sacrifice — feel almost foreign. Yet it comes alive in ancient texts, which show the heights that true friendship can inspire.

In Homer’s “Iliad,” Achilles and Patroclus shared an unbreakable bond forged in childhood and through battle. When Patroclus was killed, Achilles’ rage and grief changed the course of the Trojan War and of history. The Bible describes the friendship of Jonathan and David, whose devotion to one another, to their people, and to God transcended ambition and even family ties: “The soul of Jonathan was knit with the soul of David.”

These friendships were not one-sided projections. They were built upon shared experiences and selflessness that artificial intelligence can never offer.

Each time we choose the easy route of AI companionship over the hard reality of human relationships, we render ourselves less available and less able to achieve the true friendship our ancestors enjoyed.

Recovering genuine friendship requires forming people who are capable of being friends. People must be taught how to speak, listen, and seek truth together — something our current educational system has largely forgotten.

Classical education offers a remedy, reviving these habits of human connection by immersing students in the great moral and philosophical conversations of the past. Unlike modern classrooms, where students passively absorb information, classical seminars require them to wrestle together over what matters most: love in Plato’s “Symposium,” restlessness in Augustine’s “Confessions,” loss in Virgil’s “Aeneid,” or reconciliation in Shakespeare’s “King Lear.”

These dialogues force students to listen carefully, speak honestly, and allow truth — not ego — to guide the exchange. They remind us that friendship is not built on convenience but on mutual searching, where each participant must give as well as receive.

Reclaiming humanity

In a world tempted by the frictionless ease of talking to machines, classical education restores human encounters. Seminars cultivate the courage to confront discomfort, admit error, and grapple with ideas that challenge our assumptions — a rehearsal for the moral and social demands of real friendship.

Photo by Yuichiro Chino via Getty Images

Is classroom practice enough for friendship? No. But it plants the seeds. Habits of conversation, humility, and shared pursuit of truth prepare students to form real friendships through self-sacrifice outside the classroom: to cook for an exhausted co-worker, to answer the late-night call for help, to lovingly tell another he or she is wrong, to simply be present while someone grieves.

It’s difficult to form friendships in the modern world, where people are isolated in their homes, occupied by screens, and vexed by distractions and schedules. Technology tempts us with the illusion of effortless companionship — someone who is always where you are, whenever you want to talk. Like all fantasies, it can be pleasant for a time. But it’s not real.

Real friendships are rooted in ideals older than machines and formed through shared struggles and selfless giving.

Lonely people don’t need better algorithms. We need better friends — and the courage to be one.

Editor’s note: This article was published originally in the American Mind.

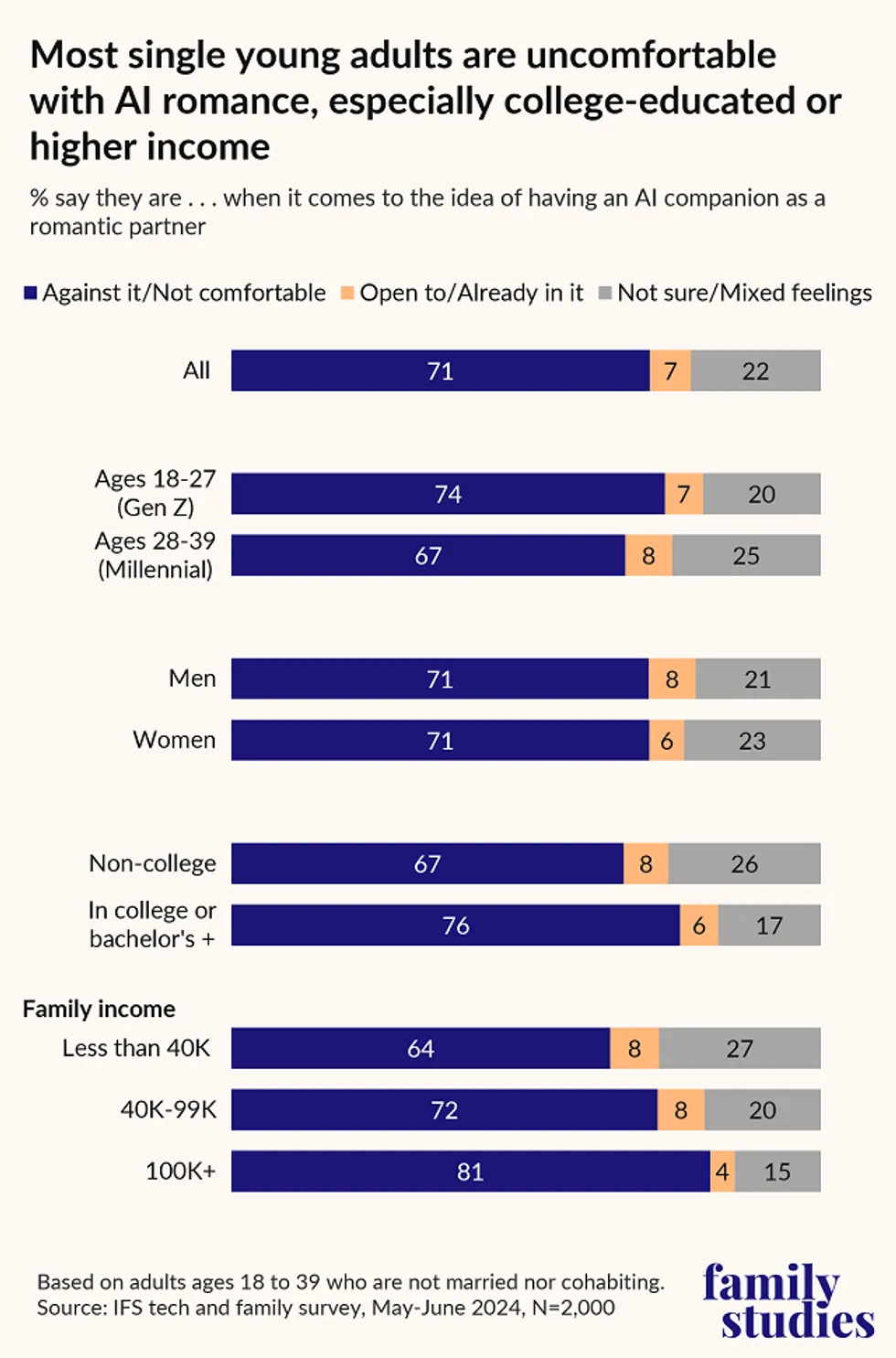

Liberals, heavy porn users more open to having an AI friend, new study shows

A small but significant percentage of Americans say they are open to having a friendship with artificial intelligence, while some are even open to romance with AI.

The figures come from a new study by the Institute for Family Studies and YouGov, which surveyed American adults under 40 years old. The data revealed that while very few young Americans are already friends with some sort of AI, about 10 times that number are open to it.

Just 1% of Americans under 40 who were surveyed said they were already friends with an AI. However, a staggering 10% said they are open to the idea. With 2,000 participants surveyed, that’s 200 people who said they might be friends with a computer program.

Liberals said they were more open to the idea of befriending AI (or are already in such a friendship) than conservatives were, to the tune of 14% of liberals vs. 9% of conservatives.

The idea of being in a “romantic” relationship with AI, not just a friendship, again produced some troubling — or scientifically relevant — responses.

When it comes to young adults who are not married or “cohabitating,” 7% said they are open to the idea of being in a romantic partnership with AI.

At the same time, a larger percentage of young adults think that AI has the potential to replace real-life romantic relationships; that number sits at a whopping 25%, or 500 respondents.

There is a large overlap with frequent pornography users: the more often respondents say they consume online porn, the more likely they are to be open to having an AI as a romantic partner or to already be in such a relationship.

Only 5% of those who said they never consume porn, or do so “a few times a year,” said they were open to an AI romantic partner.

That number goes up to 9% for those who watch porn between once or twice a month and several times per week. For those who watch online porn daily, the number was 11%.

Overall, young adults who are heavy porn users were the group most open to having an AI girlfriend or boyfriend, in addition to being the most open to an AI friendship.

Graphic courtesy Institute for Family Studies

“Roughly one in 10 young Americans say they’re open to an AI friendship — but that should concern us,” Dr. Wendy Wang of the Institute for Family Studies told Blaze News.

“It signals how loneliness and weakened human connection are driving some young adults to seek emotional comfort from machines rather than people,” she added.

The survey also found that young women were more likely than men to perceive AI as a threat in general, with 28% of women agreeing with the idea vs. 23% of men. Women were also less excited about AI’s effect on society: just 11% of women were excited vs. 20% of men.
