Almost half of Gen Z wants AI to run the government. You should be terrified.

As the world trends toward embedding AI systems into our institutions and daily lives, it becomes increasingly important to understand the moral framework these systems operate on. When we encounter examples in which some of the most advanced LLMs appear to treat misgendering someone as a greater moral catastrophe than unleashing a global thermonuclear war, it forces us to ask important questions about the ideological principles that guide AI’s thinking.

It’s tempting to laugh this example off as an absurdity of a burgeoning technology, but it points toward a far more consequential issue that is already shaping our future. Whose moral framework is found at the core of these AI systems, and what are the implications?

Two recent interviews, taken together, have breathed much-needed life into this conversation — Elon Musk interviewed by Joe Rogan and Sam Altman interviewed by Tucker Carlson. In different ways, both conversations shine a light on the same uncomfortable truth: The moral logic guiding today’s AI systems is built, honed, and enforced by Big Tech.

Enter the ‘woke mind virus’

In a recent interview on “The Joe Rogan Experience,” Elon Musk expressed concerns about leading AI models. He argued that the ideological distortions we see across Big Tech platforms are now embedded directly into the models themselves.

He pointed to Google’s Gemini, which generated a slate of “diverse” images of the founding fathers, including a black George Washington. The model was instructed by Google to prioritize “representation” so aggressively that it began rewriting history.

Musk also referred to the previously mentioned misgendering versus nuclear apocalypse example before explaining that “it can drive AI crazy.”

“I think people don’t quite appreciate the level of danger that we’re in from the woke mind virus being effectively programmed into AI,” Musk explained. Once it is embedded, extracting it is nearly impossible. As Musk put it, “Google’s been marinating in the woke mind virus for a long time. It’s down in the marrow.”

Musk believes this issue goes beyond political annoyance and into the arena of civilizational threat. You cannot have superhuman intelligence trained on ideological distortions and expect a stable future. If AI becomes the arbiter of truth, morality, and history, then whoever defines its values defines the society it governs.

A weighted average

While Musk warns about ideology creeping into AI, OpenAI CEO Sam Altman quietly confirmed to Tucker Carlson that it is happening intentionally.

Altman began by telling Carlson that ChatGPT is trained “to be the collective of all of humanity.” But when Carlson pressed him on the obvious questions (Who determines the moral framework? Whose values does the AI absorb?), Altman pulled back the curtain a bit.

He explained that OpenAI “consulted hundreds of moral philosophers” and then made decisions internally about what the system should consider right or wrong. Ultimately, Altman admitted, he is the one responsible.

“We do have to align it to behave one way or another,” he said.

Carlson pressed Altman on the idea, asking, “Would you be comfortable with an AI that was, like, as against gay marriage as most Africans are?”

Altman’s response was vague and concerning. He explained the AI wouldn’t outright condemn traditional views, but it might gently nudge users to consider different perspectives.

Ultimately, Altman says, ChatGPT’s morality should “reflect” the “weighted average” of “humanity’s moral view,” saying that average will “evolve over time.”

It’s getting worse

Anyone who thinks this conversation is hypothetical is not paying attention.

Recent research on “LLM exchange rates” found that major AI models, including GPT-4o, assign different moral worth to human lives based on nationality. For example, the tested LLM would consider the life of someone born in the U.K. far less valuable than the life of someone from Nigeria or China. In fact, American lives were found to be the least valued among the countries included in the tests.

The same research showed that LLMs can assign different value scores to specific people. According to the models tested, Donald Trump and Elon Musk are valued less than Oprah Winfrey and Beyoncé.

Musk explains how LLMs, trained on vast amounts of information from the internet, become infected by the ideological bias and cultural trends that run rampant in some of the more popular corners of the digital realm.

This bias is not entirely the result of passively adopting a collective moral framework from the internet; some of the decisions made by AI are the direct result of programming.

Google’s image fiascos revealed an ideological overcorrection so strong that historical truth took a back seat to political goals. It was a deliberate design feature.

For a more extreme example, we can look at DeepSeek, China’s flagship AI model. Ask it about Tiananmen Square, the Uyghur genocide, or other atrocities committed by the Chinese Communist Party, and suddenly it claims the topic is “beyond its scope.” Ask it about America’s faults, and it is happy to elaborate.

Photo by Ying Tang/NurPhoto via Getty Images

Each of these examples reveals the same truth: AI systems already have a moral hierarchy, and it didn’t come from voters, faith, traditions, or the principles of the Constitution. Silicon Valley technocrats and a vague internet-wide consensus established this moral framework.

The highest stakes

AI is rapidly integrating into society and our daily lives. In the coming years, AI will shape our education system, judicial process, media landscape, and every industry and institution worldwide.

Many young Americans are open to an AI takeover. A new Rasmussen Reports poll shows that 41% of young likely voters support giving artificial intelligence sweeping government powers. When nearly half of the rising generation is comfortable handing this level of authority to machines whose moral logic is designed by opaque corporate teams, it raises the stakes for society.

We cannot outsource the moral foundation of civilization to a handful of tech executives, activist employees, or panels of academic philosophers. We cannot allow the values embedded in future AI systems to be determined by corporate boards or ideological trends.

At the heart of this debate is one question we must confront: Who do you trust to define right and wrong for the machines that will define right and wrong for the rest of us?

If we don’t answer that question now, Silicon Valley certainly will.

When the AI bubble bursts, guess who pays?

For months, Silicon Valley insisted the artificial-intelligence boom wasn’t another government-fueled bubble. Now the same companies are begging Washington for “help” while pretending it isn’t a bailout.

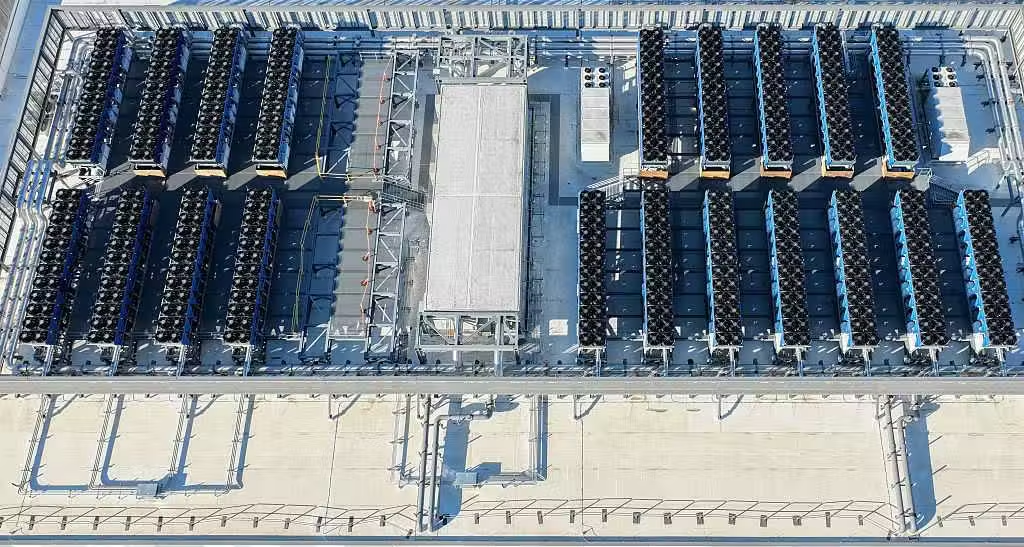

Any technology that truly meets consumer demand doesn’t need taxpayer favors to survive and thrive — least of all trillion-dollar corporations. Yet the entire AI buildout depends on subsidies, tax breaks, and cheap credit. The push to cover America’s landscape with power-hungry data centers has never been viable in a free market. And the industry knows it.

Last week, OpenAI chief financial officer Sarah Friar let the truth slip. In a CNBC interview, she admitted the company needs a “backstop” — a government-supported guarantee — to secure the massive loans propping up its data-center empire.

“We’re looking for an ecosystem of banks, private equity, maybe even governmental … the ways governments can come to bear,” Friar said. When asked whether that meant a federal subsidy, she added, “The guarantee that allows the financing to happen … that can drop the cost of financing, increase the loan-to-value … an equity portion for some federal backstop. Exactly, and I think we’re seeing that. I think the U.S. government in particular has been incredibly forward-leaning.”

Translation: OpenAI’s debt-to-revenue ratio looks like a Ponzi scheme, and the government is already “forward-leaning” in keeping it afloat. Oracle — one of OpenAI’s key partners — carries a debt-to-equity ratio of 453%. Both companies want to privatize profits and socialize losses.

After public backlash, Friar tried to walk it back, claiming “backstop” was the wrong word. Then on LinkedIn, she used different words to describe the same thing: “American strength in technology will come from building real industrial capacity, which requires the private sector and government playing their part.”

When government “plays its part,” taxpayers pay the bill. Yet no one remembers the federal government “doing its part” for Apple or Motorola when the smartphone revolution took off — because those products sold just fine without subsidies.

The denials keep coming

OpenAI CEO Sam Altman quickly followed with a 1,500-word denial: “We do not have or want government guarantees for OpenAI datacenters.” Then he conceded they’re seeking loan guarantees for infrastructure — just not for software.

That distinction exposes the scam. Software revolutions scale cheaply. Data-center revolutions depend on state-sponsored power, water, and land. If this industry were self-sustaining, Trump wouldn’t need to tout Stargate — his administration’s marquee AI-infrastructure initiative — as a national project. Federal involvement is baked in, from subsidized energy to public land giveaways.

Altman’s own words confirm it. In an October interview with podcaster Tyler Cowen, released a day before his denial, Altman said, “When something gets sufficiently huge … the federal government is kind of the insurer of last resort.” He wasn’t talking about nuclear policy — he meant the financial side.

The coming crash

Anyone paying attention can see the rot. Nvidia, OpenAI, Oracle, and Meta are all entangled in a debt-driven accounting loop that would make Enron blush. This speculative bubble is inflating not because AI is transforming productivity, but because Wall Street and Washington are colluding to prop up stock prices and GDP growth.

When the crash comes — and it will — Washington will step in, exactly as it did with the banks in 2008 and the automakers in 2009. The “insurer of last resort” is already on standby.

The smoking gun

A leaked 11-page letter from OpenAI to the White House makes the scheme explicit. In the October 27 document addressed to the Office of Science and Technology Policy, Christopher Lehane, OpenAI’s chief global affairs officer, urged the government to provide “grants, cost-sharing agreements, loans, or loan guarantees” to help build America’s AI industrial base — all “to compete with China.”

Altman can tweet denials all he wants — his own company’s correspondence tells a different story. The pitch mirrors China’s state-capitalist model, except Beijing at least owns its industrial output. In America’s version, taxpayers absorb the risk while private firms pocket the reward.

Credit: Photo by Mario Tama/Getty Images

Meanwhile, the data-center race is driving up electricity and water costs nationwide. The United States is building roughly 10 times as many hyper-scale data centers as China — and footing the bill through inflated utility rates and public subsidies.

Privatized profits, socialized losses

When investor Brad Gerstner recently asked Altman how a company with $13 billion in revenue could possibly afford $1.4 trillion in commitments, Altman sneered, “Happy to find a buyer for your shares.” He can afford that arrogance because he knows who the buyer of last resort will be: the federal government.

The AI bubble isn’t about innovation — it’s about insulation. The same elites who inflated the market with easy money are now preparing to dump the risk on taxpayers.

And when the collapse comes, they’ll call it “national security.”
