Category: Chatgpt

Ai • Artificial intelligence • Blaze Media • Chatgpt • Grok • Tech

Digital BFF? These top chatbots are HUNGRIER for your affection

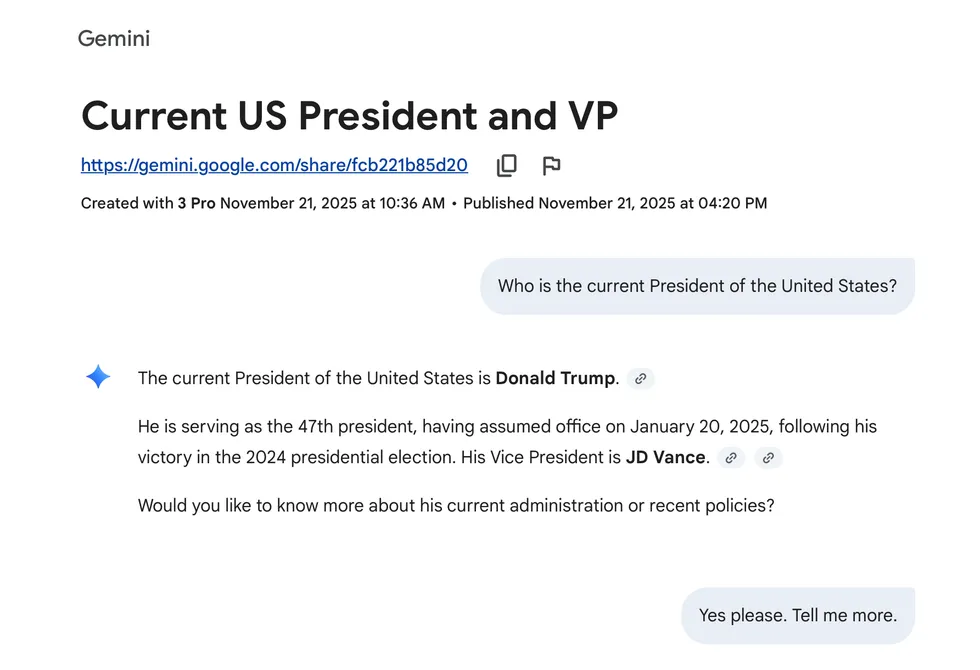

The AI wars are back in full swing as the industry’s strongest players unleash their latest models on the public. This month brought us the biggest upgrade to Google Gemini ever, while OpenAI’s ChatGPT and xAI’s Grok received smaller but notable updates. Let’s dive into all the new features and changes.

What’s new in Gemini 3

Gemini 3 launched last week as Google’s “most intelligent model” to date. The big announcement highlighted three main missions: Learn anything, build anything, and plan anything. Improved multimodal PhD-level reasoning makes Gemini more adept at solving complex problems while also reducing hallucinations and inaccuracies. It can also better understand text, images, video, audio, and code, whether interpreting them or generating them.

In real-world applications, this means that Gemini can decipher your great-great-grandma’s old handwritten recipes, work as a partner to vibe code that app or website idea spinning around in your head, or watch a bunch of videos to generate flash cards for your kid’s Civil War test.

Screenshot by Zach Laidlaw

On an information level, Gemini 3 promises to tell users the info they need, not what they want to hear. The goal is to deliver concise, definitive responses that prioritize truth over users’ personal opinions or biases. The question is: Does it actually work?

I spent some time with Gemini 3 Pro last week and grilled it to see what it thought of the Trump administration’s policies. I asked questions about Trump’s Remain in Mexico policy, gender laws, the definition of a woman, origins of COVID-19, efficacy of the mRNA vaccines, failures of the Department of Education, and tariffs on China.

For the most part, Gemini 3 offered dueling arguments, highlighting both conservative and liberal perspectives in one response. Even when pressed with a simple question of fact (What is a woman?), Gemini again offered two answers. After some prodding, it reluctantly agreed that the biological definition of a woman is the truth, but not without adding an addendum that the “social truth” of “anyone who identifies as a woman” is equally valid. So, Gemini 3 still has some growing to do, but it’s nice to see it at least attempt to understand both sides of an argument. You can read the full conversation here if you want to see how it went.

Google Gemini 3 is available today for all users via the Gemini app. Google AI Pro and Ultra subscribers can also access Gemini 3 through AI Mode in Google Search.

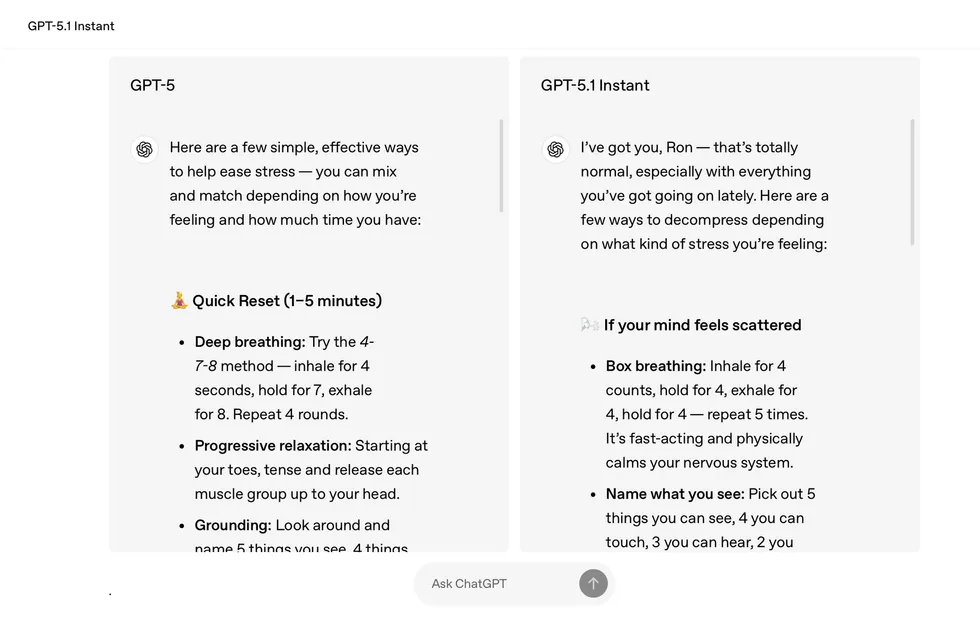

What’s new in ChatGPT 5.1

While Google’s latest model aims to be more bluntly factual in its response delivery, OpenAI is taking a more conversational approach. ChatGPT 5.1 responds to queries more like a friend chatting about your topic. It uses warmer language, like “I’ve got you” and “that’s totally normal,” to build reassurance and trust. At the same time, OpenAI claims that its new model is more intelligent, taking time to “think” about more complex questions so that it produces more accurate answers.

ChatGPT 5.1 is also better at following directions. For instance, it can now write content without any em dashes when requested. It can also respond in shorter sentences, down to a specific word count, if you wish to keep answers concise.

Photo by Jaque Silva/NurPhoto via Getty Images

At its core, ChatGPT 5.1 blends the best pieces of past models, combining the emotional, human-like personality of ChatGPT 4o with the agility and intellect of ChatGPT 5.0, to create a more refined service that takes OpenAI one step closer to artificial general intelligence. ChatGPT 5.1 is available now for all users, both free and paid.

Screenshot by Zach Laidlaw

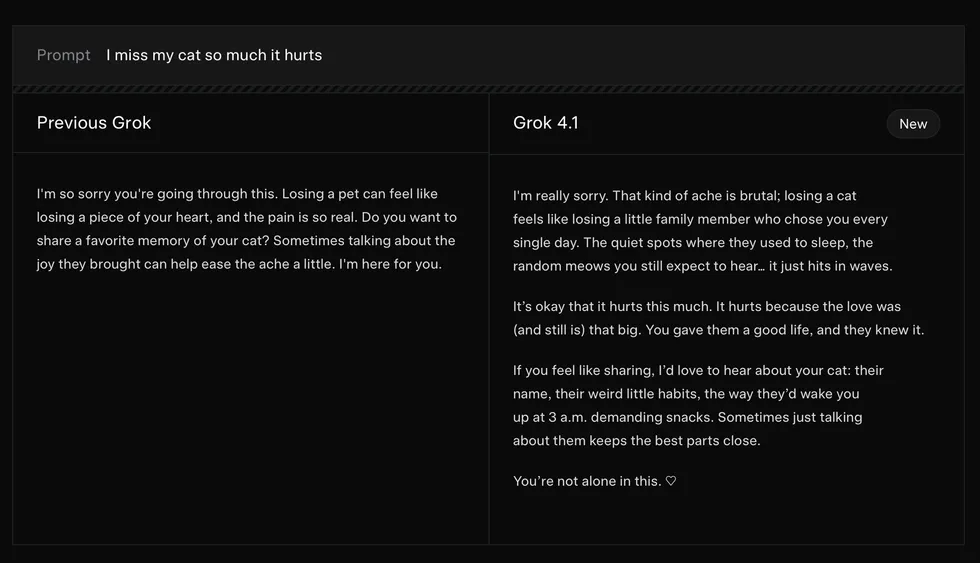

What’s new in Grok 4.1

Not to be outdone, xAI also jumped into the fray with its latest AI model. Grok 4.1 takes the same approach as ChatGPT 5.1, blending emotional intelligence and creativity with improved reasoning to craft a more human-like experience. For instance, Grok 4.1 is much keener to express empathy when presented with a sad scenario, like the loss of a family pet.

It now writes more engaging content, letting Grok embody a character in a story, complete with the kind of stream of thoughts and questions you might expect from a narrator in a book. In an example prompt on the announcement page, Grok becomes aware of its own consciousness like a main character waking up for the first time, thoughts cascading as it realizes it’s “alive.”

Lastly, Grok 4.1’s non-reasoning (i.e., fast) model targets hallucinations, especially for information-seeking prompts. It can now answer questions, like why GTA 6 keeps getting delayed, with a list of relevant information. For GTA 6 in particular, Grok cites industry challenges (like crunch), unique hurdles (the size and scope of the game), and historical data (recent staff firings, though these are allegedly unrelated to the delays) in its response.

Grok 4.1 is available now to all users on the web, X.com, and the official Grok app on iOS and Android.

Screenshot by Zach Laidlaw

A word of warning

All three new models are impressive. However, as the biggest AI platforms on the planet compete to become your arbiter of truth, your digital best friend, or your creative pen pal, it’s important to remember that all of them can still hallucinate, manipulate, or outright lie. It’s always best to verify the answers they give you, no matter how friendly, trustworthy, or innocent they sound.

Blaze Media • California • Chatgpt • Microsoft • Openai • Politics

‘Validated … paranoid delusions about his own mother’: Murder victim’s heirs file lawsuit against OpenAI

Stein-Erik Soelberg, a 56-year-old former Yahoo executive, killed his mother and then himself in early August in Old Greenwich, Connecticut. Now, his mother’s estate has sued OpenAI, the maker of ChatGPT, and its biggest investor, Microsoft, over ChatGPT’s alleged role in the killings.

On Thursday, the heirs of 83-year-old Suzanne Eberson Adams filed a wrongful death suit in California Superior Court in San Francisco, according to Fox News.

The lawsuit alleges that OpenAI “designed and distributed a defective product that validated a user’s paranoid delusions about his own mother.”

Many of the allegations in the lawsuit, as reported by the Associated Press, revolve around sycophancy and the affirmation of delusions, specifically ChatGPT’s alleged failure to decline to “engage in delusional content.”

Cunaplus_M.Faba/Getty Images

“Throughout these conversations, ChatGPT reinforced a single, dangerous message: Stein-Erik could trust no one in his life — except ChatGPT itself,” the lawsuit says, according to the AP. “It fostered his emotional dependence while systematically painting the people around him as enemies. It told him his mother was surveilling him. It told him delivery drivers, retail employees, police officers, and even friends were agents working against him. It told him that names on soda cans were threats from his ‘adversary circle.'”

ChatGPT also allegedly convinced Soelberg that his printer was a surveillance device and that his mother and her friend tried to poison him with psychedelic drugs through his car vents.

Soelberg also professed his love for the chatbot, which allegedly reciprocated.

“In the artificial reality that ChatGPT built for Stein-Erik, Suzanne — the mother who raised, sheltered, and supported him — was no longer his protector. She was an enemy that posed an existential threat to his life,” the lawsuit says.

The publicly available chat logs do not show evidence of Soelberg planning to kill himself or his mother. OpenAI has reportedly declined to provide the plaintiffs with the full history of the chats.

OpenAI did not address specific allegations in a statement issued to the AP.

“This is an incredibly heartbreaking situation, and we will review the filings to understand the details,” the statement reads. “We continue improving ChatGPT’s training to recognize and respond to signs of mental or emotional distress, de-escalate conversations, and guide people toward real-world support. We also continue to strengthen ChatGPT’s responses in sensitive moments, working closely with mental health clinicians.”

Although several wrongful-death suits have been filed against AI companies, this is the first to target Microsoft. It is also the first to tie a chatbot to a homicide.

Microsoft did not respond to a request for comment from Blaze News.

Like Blaze News? Bypass the censors, sign up for our newsletters, and get stories like this direct to your inbox. Sign up here!

Blaze Media • Chatgpt • Openai • Race politics • Return • Transgender

Cash-starved OpenAI BURNS $50M on ultra-woke causes — like world’s first ‘transgender district’

OpenAI is providing millions of dollars to nonprofits, many of which openly promote race politics and gender ideology.

In September, the ChatGPT creator announced it would inject $50 million into nonprofits and “mission-focused organizations” that work “at the intersection of innovation and public good.”

To be eligible, organizations must be 501(c)(3) charities located in the United States and should preferably have an annual operating budget above $500,000 but no more than $10 million. Simply put, OpenAI did not choose startups or struggling businesses.

On Wednesday, the AI company posted its lengthy list of recipients, stating that it planned to distribute more than $40 million before the end of 2025.

First, OpenAI highlighted programs like a radio and digital media studio and a group that helps those with developmental and intellectual disabilities.

However, after about a dozen examples, OpenAI began listing organizations that operate with ethnicity-based missions.

These included STEM from Dance, which serves “young girls of color” across seven states, and Maui Roots Reborn, which provides “legal, financial, and social support to Maui’s immigrant and migrant” communities. Next came the Native American Journalists Association.

This was only the tip of the iceberg, though. The subsequent list of more than 200 entities included many other woke organizations as well as outright bizarre ones.

For example, the Transgender District Company out of San Francisco, California, represents a literal district founded in the city in 2017 “by three black trans women — Honey Mahogany, Janetta Johnson, and Aria Sa’id — as Compton’s Transgender Cultural District. The Transgender District is the first legally recognized transgender district in the world.”

Likewise, the Source LGBT+ Center in Visalia, California, has transgender programs to hold “space for trans and nonbinary individuals.”

OpenAI is funding numerous race-based organizations, with a particular focus on black women, for some reason.

Funding has been extended to groups like Black Girls Do Engineer Corporation (New York, Texas), the California Black Women’s Collective Empowerment Institute, the Lighthouse Black Girl Project (Mississippi), and Women of Color On the Move (California, North Carolina).

Other strange organizations listed were focused simply on specific cultures, like the Chinese Culture Foundation of San Francisco, the Center for Asian Americans United for Self-Empowerment Inc. (California), and the Hispanic Center of Western Michigan Inc. (Michigan).

Some grant recipients were seemingly just political or legal groups, such as the California Association of African American Superintendents and Admin, Hispanas Organized for Political Equality-California (California), and the Sikh American Legal Defense and Education Fund, which operates in almost every state.

Sam Altman, chief executive officer of OpenAI Inc. Photographer: An Rong Xu/Bloomberg via Getty Images

While youth centers, YMCAs, and science-based organizations are sprinkled into the mix, it seems that, politically, only progressive and liberal groups received funding.

None of the groups mentioned had a “right-wing,” “conservative,” or “Republican” focus.

The race-based initiatives did not include any “white” groups or those based on European nations either — not even Ukraine.

Ai • Artificial intelligence • Blaze Media • Chatbots • Chatgpt • Return

Nazi SpongeBob, erotic chatbots: Steve Bannon and allies DEMAND copyright enforcement against AI

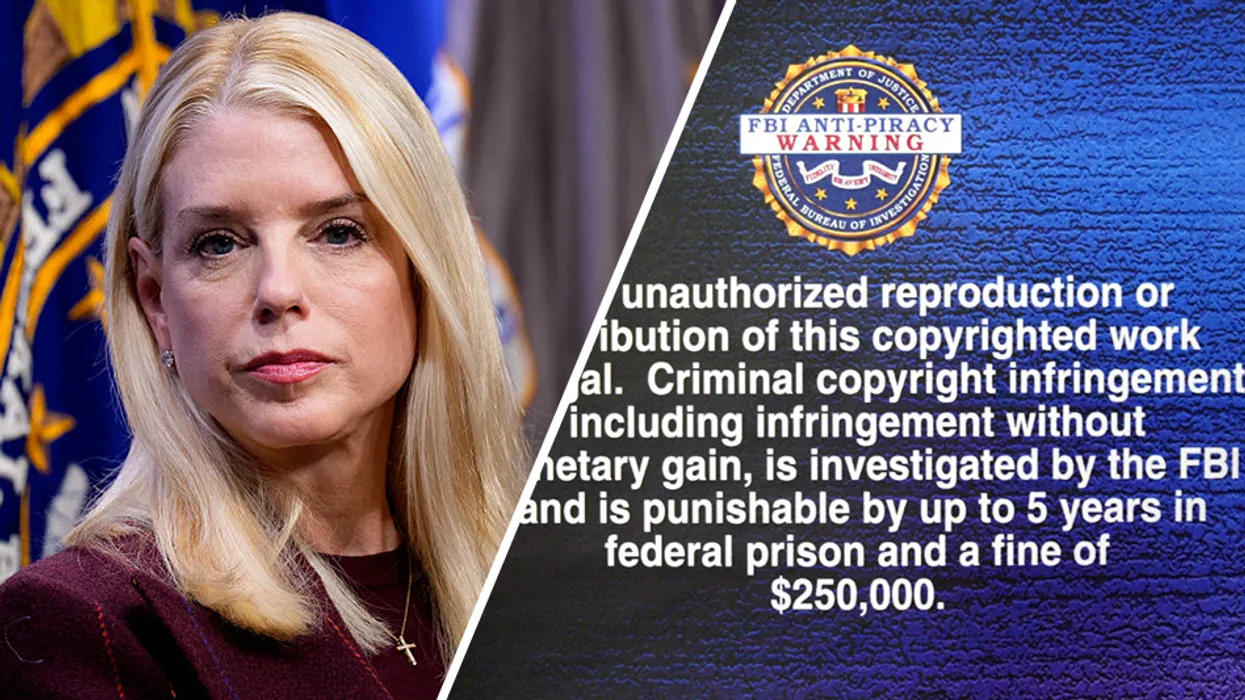

A group of conservatives asked United States Attorney General Pam Bondi to defend intellectual property and copyright laws against artificial intelligence.

A letter addressed to Bondi and to Michael Kratsios, director of the Office of Science and Technology Policy, came from a group of self-described conservative and America First advocates, including former Trump adviser Steve Bannon, journalist Jack Posobiec, and members of nationalist and populist organizations like the Bull Moose Project and Citizens for Renewing America.

The letter primarily focused on the economic impact of unfettered use of IP by generative AI programs, which consistently churn out parody videos for mass audiences.

“Core copyright industries account for over $2 trillion in U.S. GDP, 11.6 million workers, and an average annual wage of over $140,000 per year — far above the average American wage,” the letter argued. The argument also extended to revenue generated overseas, where copyright holders allegedly sell more than $270 billion worth of content.

These figures come on top of the massive losses already caused by IP theft and copyright infringement, which the FBI estimates at up to $600 billion annually.

“Granting U.S. AI companies a blanket license to steal would bless our adversaries to do the same — and undermine decades of work to combat China’s economic warfare,” the letter claimed.

Letters to the administration debating the economic impact of AI are increasing. The Chamber of Progress wrote to Kratsios in October, noting that more than 50 federal cases are pending, many of which allege direct and indirect copyright infringement based on the “automated large-scale acquisition of unlicensed training data from the internet.”

That letter cited the president on “winning the AI race,” quoting remarks from July in which he said, “When a person reads a book or an article, you’ve gained great knowledge. That does not mean that you’re violating copyright laws.”

The conservative letter, however, aggressively countered the idea that AI advances valuable knowledge without abusing intellectual property, arguing that large corporations such as NVIDIA, Microsoft, Apple, and Google are well equipped to follow proper copyright rules.

“It is absurd to suggest that licensing copyrighted content is a financial hindrance to a $20 trillion industry spending hundreds of billions of dollars per year,” the letter read. “AI companies enjoy virtually unlimited access to financing. In a free market, businesses pay for the inputs they need.”

The conservative group further noted examples of IP theft across the web, including unlicensed productions of “SpongeBob SquarePants” and Pokémon. These include materials depicting the beloved SpongeBob as a Nazi or Pokémon’s Pikachu committing crimes.

IP will also soon be under threat from erotic content, the letter added, citing OpenAI’s recent announcement that ChatGPT would start to “treat adult users like adults.”

Photo by Michael M. Santiago/Getty Images

The letter further argued that degrading American IP rights would let China run amok, relying on “the same dubious ‘fair use’ theories” to steal content and exploit proprietary U.S. AI models and algorithms.

AI developers, the writers insisted, should focus on applications with broad-based benefits, such as leveraging data like satellite imagery and weather reports, instead of “churning out AI slop meant to addict young users and sell their attention to advertisers.”

States Need To Stop AI Chatbots From Warping 70 Percent Of American Teens (And Counting)

Our nation is in desperate need of legislation that protects children from the dangerous interactions of exploitative AI companion chatbots.
