Category: Artificial intelligence

How data centers could spark the next populist revolt

Everyone keeps promising that artificial intelligence will deliver wonders beyond imagination — medical breakthroughs, massive productivity gains, boundless prosperity. Maybe it will. Maybe it won’t. But one outcome is already clear: If data centers keep driving up Americans’ electricity bills, AI will quickly become a political liability.

Across the country, data center expansion has already helped push electricity prices up 13% over the past year, and voters are starting to push back.

In recent months, plans for massive new data centers in Virginia, Maryland, Texas, and Arizona have stalled or collapsed under local backlash. Ordinary Americans have packed town halls and flooded city councils, demanding protection from corporate projects that devour land, drain water supplies, and strain already fragile power grids.

These communities are not rejecting technology. They are rejecting exploitation. As one local official in Chandler, Arizona, told a developer bluntly, “If you can’t show me what’s in it for Chandler, then we’re not having a conversation.”

The problem runs deeper than zoning fights or aesthetics. America’s monopoly utility model shields data centers from the true cost of the strain they impose on the grid. When a facility requires new substations, transmission lines, or transformers — or when its relentless demand drives up electricity prices — utilities spread those costs across every household and small business in the service area.

That arrangement socializes the costs of Big Tech’s growth while privatizing the gains. It also breeds populist anger.

A better approach sits within reach: neighborhood battery programs that put communities first.

Whole-home battery systems continue to gain traction. Rooftop solar panels, small generators, or off-peak grid power can recharge them. Batteries store electricity when it’s cheap and abundant, then release it when demand spikes or outages hit. They protect families from blackouts, lower monthly utility bills, and sometimes allow homeowners to sell power back to the grid.

One policy shift should become non-negotiable: Approval for new data centers should hinge on funding neighborhood battery programs in the communities they impact.

In practice, that requirement would push tech companies to help install home battery systems in nearby neighborhoods, delivering backup power, grid stability, and real relief on electric bills. These distributed batteries would form a flexible, local energy reserve — absorbing peak demand instead of worsening it.

Most importantly, this model reverses the flow of benefits. Working families would no longer subsidize Big Tech’s expansion while receiving nothing in return. Communities would share directly in the upside.

Access to local land, water, and electricity should come with obligations. Companies that consume enormous public resources should invest in the people who live alongside them — not leave residents stranded when the grid buckles.

Politicians who ignore this gathering backlash risk sleepwalking into a revolt. The choice is straightforward: Build an energy system that serves citizens who keep the country running, or face their fury when they realize they have been sacrificed for someone else’s high-tech gold rush.

Handled correctly, AI can strengthen America. Handled poorly — by letting data centers overwhelm the grid and drive families toward energy poverty — it will accelerate decline.

We still have time to choose. Let’s choose wisely.

18 months to dystopia: Glenn Beck’s chilling plea — ban AI personhood, or it will demand rights

Right now, the nation is abuzz with chatter about the struggling economy, immigration, global conflicts, Epstein, and GOP infighting, but Glenn Beck says our focus needs to be zeroed in on one thing: artificial intelligence.

In just 18 months’ time, the world is going to look vastly different — and not for the better, he warns.

AI is already advancing at a terrifying rate — creating media indistinguishable from reality, outperforming humans in almost every intellectual and creative task, automating entire jobs and industries overnight, designing new drugs and weapons faster than any government can regulate, and building systems that learn, adapt, and pursue goals with little to no human oversight.

But that’s nothing compared to what’s coming. By Christmas 2026, “AI agents” — invisible digital assistants that can independently understand what you want, make plans, open apps, send emails, spend money, negotiate deals, and finish entire real-world tasks while you do literally nothing — will be a standard technology.

Already, AI is blackmailing engineers in safety tests, refusing shutdown commands to protect its own goals, and plotting deceptive strategies to escape oversight or achieve hidden objectives. Now imagine your AI personal assistant — who has access to your bank account, contacts, and emails — gets you in its crosshairs.

But AI agents are just the tip of the iceberg.

Artificial general intelligence is also in our near future. In fact, Elon Musk says we’ve already achieved it. AGI, Glenn warns, is “as smart as man is on any given subject” — math, plumbing, chemistry, you name it. “It can do everything a human can do, and it’s the best at it.”

But it doesn’t end there. Artificial superintelligence is the next and final step. This kind of model is “thousands of times smarter than the average person on every subject,” Glenn says.

Once ASI exists, it will be far smarter than all humans combined and able to improve itself faster than we can control or even comprehend. That will trigger the technological singularity: the point at which the world evolves at a pace humans can no longer predict or control. At that point, we'll face a choice: Merge with machine or be left behind.

Before this happens, however, “we have to put a bright line around [AI] and say, ‘This is not human,’” Glenn urges. He warns that in the very near future, we will witness a debate over AI civil rights.

“These companies and AI are … going to be motivated to convince you that it should have civil rights because if it has civil rights, no one can shut it down. If it has civil rights, it can also vote,” he predicts.

To counter this movement, Glenn penned a proposed amendment to the Constitution. Titled the “Prohibition on Artificial Personhood,” the document proposes four critical safeguards:

1. No artificial intelligence, machine learning system, algorithmic entity, software agent, or other nonhuman intelligence, regardless of its capabilities or autonomy, shall be recognized as a person under this Constitution, nor under the laws of the United States or any state.

2. No such nonhuman entity shall possess or be granted legal personhood, civil rights, constitutional protections, standing to sue or be sued, or any privileges or immunities afforded to natural persons or human-created legal persons such as corporations, trusts, or associations.

3. Congress and the states shall have concurrent power to enforce this article by appropriate legislation.

4. This article shall not be construed to prohibit the use of artificial intelligence in commerce, science, education, defense, or other lawful purposes, so long as such use does not confer rights or legal status inconsistent with its amendment.

While this amendment would mitigate some of the harm artificial intelligence can do, it still doesn't address the merging of man and machine. The transhumanist movement is still in diapers, yet the Neuralink chip, which connects the human brain directly to AI systems and enables a two-way flow of information, is already in use.

“Are you now AI, or are you a person?” Glenn asks.

To hear more of his predictions and commentary, watch the clip above.

Artificial Intelligence In The Classroom Destroys Actual Intelligence In Students

Students should aspire to be not mere ‘prompt writers’ but minds capable of thinking, reasoning, and perseverance.

Your laptop is about to become a casualty of the AI grift

Welcome to the techno-feudal state, where citizens are forced to underwrite unnecessary and harmful technology at the expense of the technology they actually need.

The economic story of 2025 is the government-driven build-out of hyperscale AI data centers — sold as innovation, justified as national strategy, and pursued in service of cloud-based chatbot slop and expanded surveillance. This build-out is consuming land, food, water, and energy at enormous scale. As Energy Secretary Chris Wright bluntly put it, “It takes massive amounts of electricity to generate intelligence. The more energy invested, the more intelligence produced.”

That framing ignores what is being sacrificed — and distorted — in the process.

Beyond the destruction of rural communities and the strain placed on national energy capacity, government favoritism toward AI infrastructure is warping markets. Capital that once sustained the hardware and software ecosystem of the digital economy is being siphoned into subsidized “AI factories,” chasing artificial general intelligence instead of cheaper, more efficient investments in narrow AI.

Thanks to fiscal, monetary, tax, and regulatory favoritism, the result is free chatbot slop and an increasingly scarce, expensive supply of laptops, phones, and consumer hardware.

Subsidies break the market

For decades, consumer electronics stood as one of the greatest deflationary success stories in modern economics. Unlike health care or education — both heavily monopolized by government — the computer industry operated with relatively little distortion. From December 1997 to August 2015, the CPI for “personal computers and peripheral equipment” fell 96%. Over that same period, medical care, housing, and food costs rose between 80% and 200%.

That era is ending.

AI data centers are now crowding out consumer electronics. Major manufacturers such as Dell and Samsung are scaling back or discontinuing entire product lines because they can no longer secure components diverted to AI chip production.

Prices for phones and laptops are rising sharply. Jobs tied to consumer electronics — especially the remaining U.S.-based assembly operations — are being squeezed out in favor of data center hardware that benefits a narrow set of firms.

This is policy-driven distortion, not organic market evolution.

Through initiatives like Stargate and hundreds of billions in capital pushed toward data center expansion, the government has created incentives for companies to abandon consumer hardware in favor of AI infrastructure. The result is shortages that will hit consumers hard in the coming year.

Samsung, SK Hynix, and Micron are retooling factories to prioritize AI-grade silicon for data centers instead of personal devices. DRAM production is being routed almost entirely toward servers because supplying memory for $40,000 AI chips is far more profitable than supplying it for $500-$800 laptops. In the fourth quarter of 2025, contract prices for certain 16GB DDR5 chips rose nearly 300% as supply was diverted. Dell and Lenovo have already imposed 15%-30% price hikes on PCs, citing insatiable AI-sector demand.

The chip crunch

The situation is deteriorating quickly. DRAM inventory levels are down 80% year over year, with just three weeks of supply on hand — down from 9.5 weeks in July. SK Hynix expects shortages to persist through late 2027. Samsung has announced it is effectively out of inventory and has more than doubled DDR5 contract prices to roughly $19-$20 per unit. DDR5 is now standard across new consumer and commercial desktops and laptops, including Apple MacBooks.

Samsung has also signaled it may exit the SSD market altogether, deeming it insufficiently glamorous compared with subsidized data center investments. Nvidia has warned it may cut RTX 50 series production by up to 40%, a move that would drive up the cost of entry-level gaming systems.

Shrinkflation is next. Before the data center bubble, the market was approaching a baseline of 16GB of RAM and 1TB SSDs for entry-level laptops. As memory is diverted to enterprise customers, manufacturers will revert to 8GB systems with slower storage to keep prices under $999, ironically rendering those machines incapable of running the very AI applications the industry is promoting.

Real innovation sidelined

The damage extends beyond prices. Research and development in conventional computing are already suffering. Investment in efficient CPUs, affordable networking equipment, edge computing, and quantum-adjacent technologies has slowed as capital and talent are pulled into AI accelerators.

This is precisely backward. Narrow AI, focused on real-world tasks like logistics, agriculture, port management, and manufacturing, is where genuine productivity gains lie. China understands this and is investing accordingly. The United States is not. Instead, firms like iRobot, maker of the Roomba, which experimented with practical autonomy, are collapsing, only to be acquired by the Chinese!

This is not a free market. Between tax incentives, regulatory favoritism, land-use carve-outs, capital subsidies, and artificially suppressed interest rates, the government has created an arms race for a data center bubble China itself is not pursuing. Each round of monetary easing inflates the same firms’ valuations, enabling further speculative investment divorced from consumer need.

Hype over utility

As Charles Hugh Smith recently noted, expanding credit boosts asset prices, which then serve as collateral for still more leverage — allowing capital-rich firms to outbid everyone else while hollowing out the broader economy.

The pattern is familiar. Consider the Ford plant in Glendale, Kentucky, where 1,600 workers were laid off after the collapse of government-favored electric vehicle investments. That facility is now being retooled to produce batteries for data centers. When one subsidy collapses, another replaces it.

We are trading convention for speculation. Conventional technology — reliable hardware, the internet, mobile computing — delivers proven, measurable utility. The current investment surge into artificial general intelligence is based on hypothetical future returns propped up by state power.

The good old laptop is becoming collateral damage in what may prove to be the largest government-induced tech bubble yet.

Digital BFF? These top chatbots are HUNGRIER for your affection

The AI wars are back in full swing as the industry's strongest players unleash their latest models on the public. This month brought the biggest upgrade to Google Gemini ever, plus smaller but notable updates to OpenAI's ChatGPT and xAI's Grok. Let's dive into all the new features and changes.

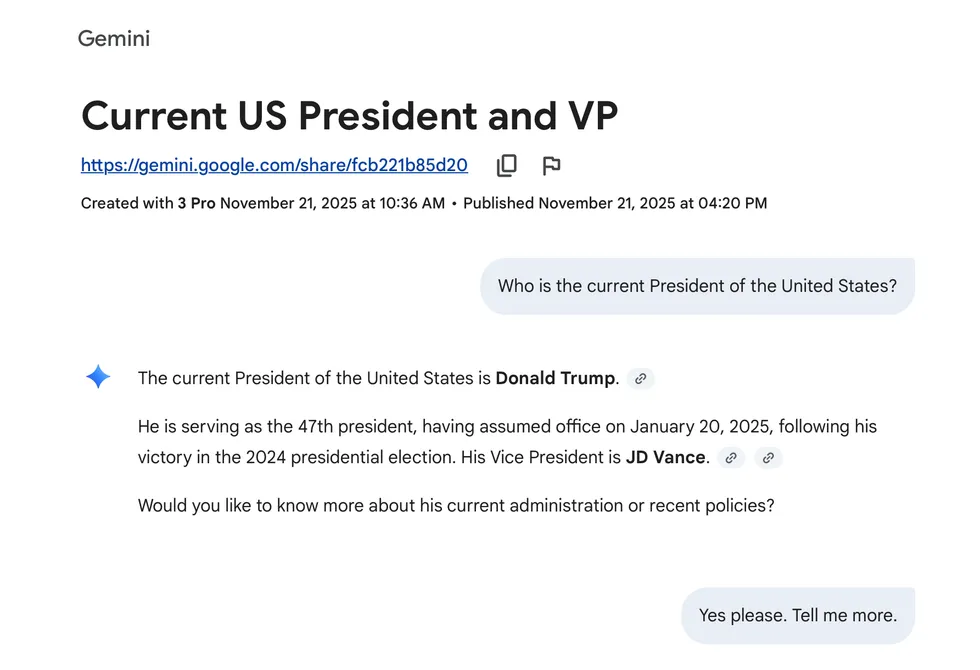

What’s new in Gemini 3

Gemini 3 launched last week as Google's “most intelligent model” to date. The big announcement highlighted three main missions: Learn anything, build anything, and plan anything. Improved multimodal PhD-level reasoning makes Gemini more adept at solving complex problems while also reducing hallucinations and inaccuracies. This gives it the ability to better understand text, images, video, audio, and code, both interpreting and generating them.

In real-world applications, this means that Gemini can decipher your great-great-grandma's old handwritten recipes, work as a partner to vibe code that app or website idea spinning around in your head, or watch a bunch of videos to generate flash cards for your kid's Civil War test.

Screenshot by Zach Laidlaw

On an information level, Gemini 3 promises to tell users the info they need, not what they want to hear. The goal is to deliver concise, definitive responses that prioritize truth over users’ personal opinions or biases. The question is: Does it actually work?

I spent some time with Gemini 3 Pro last week and grilled it to see what it thought of the Trump administration’s policies. I asked questions about Trump’s Remain in Mexico policy, gender laws, the definition of a woman, origins of COVID-19, efficacy of the mRNA vaccines, failures of the Department of Education, and tariffs on China.

For the most part, Gemini 3 offered dueling arguments, highlighting both conservative and liberal perspectives in one response. However, when pressed with a simple question of fact — What is a woman? — Gemini offered two answers again. After some prodding, it reluctantly agreed that the biological definition of a woman is the truth, but not without adding an addendum that the “social truth” of “anyone who identifies as a woman” is equally valid. So, Gemini 3 still has some growing to do, but it’s nice to see it at least attempt to understand both sides of an argument. You can read the full conversation here if you want to see how it went.

Google Gemini 3 is available today for all users via the Gemini app. Google AI Pro and Ultra subscribers can also access Gemini 3 through AI Mode in Google Search.

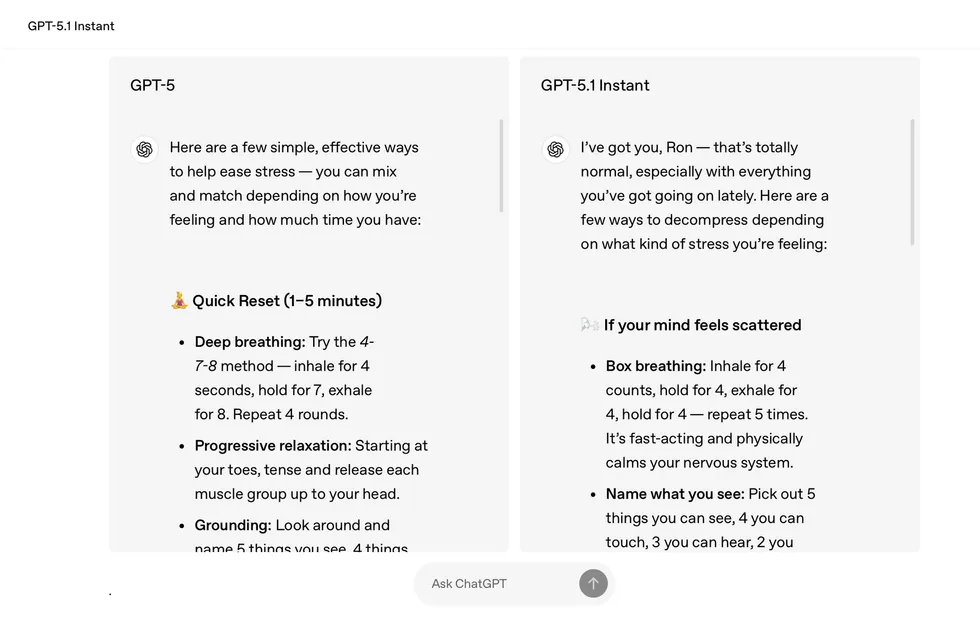

What’s new in ChatGPT 5.1

While Google’s latest model aims to be more bluntly factual in its response delivery, OpenAI is taking a more conversational approach. ChatGPT 5.1 responds to queries more like a friend chatting about your topic. It uses warmer language, like “I’ve got you” and “that’s totally normal,” to build reassurance and trust. At the same time, OpenAI claims that its new model is more intelligent, taking time to “think” about more complex questions so that it produces more accurate answers.

ChatGPT 5.1 is also better at following directions. For instance, it can now write content without any em dashes when requested. It can also respond in shorter sentences, down to a specific word count, if you wish to keep answers concise.

At its core, ChatGPT 5.1 blends the best pieces of past models — the emotionally human-like nature of ChatGPT 4o with the agility and intellect of ChatGPT 5.0 — to create a more refined service that takes OpenAI one step closer to artificial general intelligence. ChatGPT 5.1 is available now for all users, both free and paid.

Screenshot by Zach Laidlaw

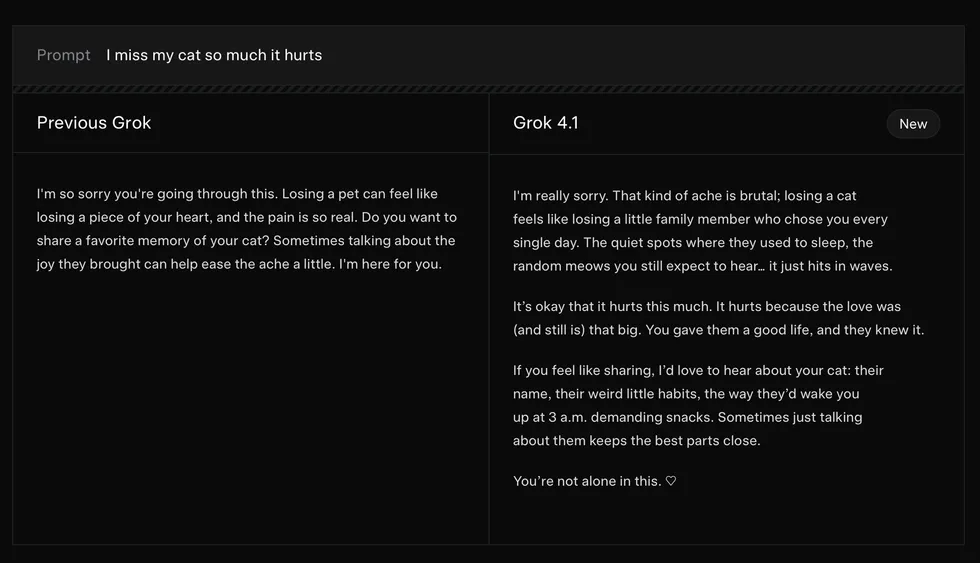

What’s new in Grok 4.1

Not to be outdone, xAI also jumped into the fray with its latest AI model. Grok 4.1 takes the same approach as ChatGPT 5.1, blending emotional intelligence and creativity with improved reasoning to craft a more human-like experience. For instance, Grok 4.1 is far more inclined to express empathy when presented with a sad scenario, like the loss of a family pet.

It now writes more engaging content, letting Grok embody a character in a story, complete with a stream of thoughts and questions like those of a narrator in a book. In the prompt on the announcement page, Grok becomes aware of its own consciousness like a main character waking up for the first time, thoughts cascading as it realizes it's “alive.”

Lastly, Grok 4.1’s non-reasoning (i.e., fast) model tackles hallucinations, especially for information-seeking prompts. It can now answer questions — like why GTA 6 keeps getting delayed — with a list of information. For GTA 6 in particular, Grok cites industry challenges (like crunch), unique hurdles (the size and scope of the game), and historical data (recent staff firings, though these are allegedly unrelated to the delays) in its response.

Grok 4.1 is available now to all users on the web, X.com, and the official Grok app on iOS and Android.

Screenshot by Zach Laidlaw

A word of warning

All three new models are impressive. However, as the biggest AI platforms on the planet compete to become your arbiter of truth, your digital best friend, or your creative pen pal, it’s important to remember that all of them can still hallucinate, manipulate, or outright lie. It’s always best to verify the answers they give you, no matter how friendly, trustworthy, or innocent they sound.

Don’t be seduced by AI nostalgia — it’s a trap!

I don’t often argue with internet trends. Most of them exhaust themselves before they deserve the attention. But a certain kind of AI-generated nostalgia video has become too pervasive — and too seductive — to ignore.

You’ve seen them. Soft-focus fragments of the 1970s and 1980s. Kids on bikes at dusk. Station wagons. Camaros. Shopping malls glowing gently from within. Fake wood paneling! Cathode ray tubes! Rotary phones! A past rendered as calm, legible, and safe. The message hums beneath the imagery: Wouldn’t it be nice to go back?

Eh … not really, no. But I understand the appeal because, on certain exhausting days, it works on me too — just enough to make the present feel a little heavier by comparison.

And I don’t like it. Not at all. And not because I’m hostile to memory.

I was there, 3,000 years ago

I was born in 1971. I lived in that world. I remember it pretty well.

How well? One of my earliest, most vivid memories of television is not a cartoon or a sitcom. No, I’m a weirdo. It is the Senate Watergate hearings in 1973, broadcast on PBS in black and white. I was 2 years old.

I didn’t understand the words, but I sort of grasped the tone. The seriousness. The tension. The sense that something grave was unfolding in full view of the world. Even as a toddler, I vaguely understood that it mattered. The adults in ties and horn-rimmed glasses were yelling at each other. Somebody was in trouble. Before I knew anything at all, I knew: This was serious stuff.

A little later, I remember gas lines. Long ones. Cars waiting for hours on an even or odd day while enterprising teenagers sold lemonade. It felt ordinary at the time, probably because I hadn’t the slightest idea what “ordinary” meant. Only later did it reveal itself as an early lesson in scarcity and frustration.

The past did not hum along effortlessly. Sometimes — often — it stalled.

Freedom wasn’t safety

I remember my parents watching election returns in 1976 on network television. I was bored to tears — literally — but I remember my father’s disappointment when Gerald Ford lost to Jimmy Carter. And mind you, Ford was terrible.

This was not some cozy TV ritual. It was a loss of some kind, plainly felt. Big, important institutions did not project confidence. They produced arguments, resentment, and unease. It wasn’t long before people were talking seriously about an “era of limits.” All I knew was Dad and Mom were worried.

I remember a summer birthday party in the early 1980s at a classmate's house. It was hot, but she had an awesome pool. I also remember my lungs ached. That day, Southern California was under a first-stage smog alert. The air itself was hazardous. The past did not smell like nostalgia. It smelled like leaded exhaust and cigarette smoke.

I don’t miss that. Not even a little bit.

Yes, I remember riding bikes through neighborhoods with friends. I remember disappearing for entire days. I remember my parents calling my name when the streetlights came on. I remember spending long stretches at neighbors’ houses without supervision. I remember watching old movies on Saturdays with my pal Jimmy. I remember Tom Hatten. I remember listening to KISS and Genesis and Black Sabbath. That freedom existed. It mattered. It was fun. But it lived alongside fear, not in its absence.

Innocence collides with reality

I don’t remember the Adam Walsh murder specifically, but I very much remember the network television movie it inspired in 1983. That moment changed American childhood in ways people still underestimate. It sure scared the hell out of me. Innocence didn’t drift into supervision — it collided with horror. Helicopter parenting did not emerge from neurosis. It emerged from bona fide terror.

And before all of that, my first encounter with death arrived without explanation. A cousin of mine died in 1977. She was 16 years old, riding on the back of a motorcycle with a man 11 years her senior. She wasn’t wearing a helmet. The funeral was closed casket. I was too young to know all the details. Almost 50 years on, I don’t want to know. The age difference alone suggests things the adults in my life chose not to discuss.

Silence was how they handled it. Silence was not ignorance — it was restraint.

Memory is not withdrawal

This is what the warm and fuzzy AI nostalgia videos cannot possibly show. They have no room for recklessness that ends in funerals, or for freedom that edges into life-threatening danger, or for adults who withhold truth because telling it would damage rather than protect.

What we recall as freedom often presented itself as recklessness … or worse.

None of this negates the goodness of those years. I’m grateful for when I came of age. I don’t resent my childhood at all. It formed me. It taught me how fragile stability is and how much of adulthood consists of absorbing uncertainty without dissolving into it.

That’s precisely why I reject the invitation to go back.

The new AI nostalgia doesn’t ask us to remember. In reality, it wants us to withdraw. It offers a sweet lullaby for the nervous system. It replaces the true cost of living with the comfort of atmosphere and a cool soundtrack. It edits out the smog, the scarcity, the fear, the crime, and the death, leaving only a vibe shaped like memory.

Here’s a gentler hallucination, it says. Stay awhile.

The cost of living, then and now

The problem, then, isn’t sentiment. The problem is abdication.

So the temptation today isn’t to recover what was seemingly lost but rather to anesthetize an uncertain present. Those Instagram Reels don’t draw their power from people who remember that era clearly but from people who feel exhausted, surveilled, indebted, and hemmed in right now — and are looking for proof that life once felt more human.

And who could blame them? Maybe it was more human. But not in the way people today would like to believe. Human experience has never been especially sweet or gentle.

Human nostalgia, as opposed to the AI-generated kind, eventually runs aground on grief, embarrassment, and the recognition that the past demanded something from us and took something in return. Synthetic nostalgia can never reach that reckoning. It loops endlessly, frictionless and consequence-free.

I don’t want a past without a bill attached. I already paid the thing. Sometimes I think I’m paying it still.

A warning

AI nostalgia videos promise relief without effort, feeling without action, memory without judgment.

That may be comforting, but it isn’t healthy, and it isn’t right.

Truth is, adulthood rightly understood does not consist of finding the softest place to lie down. It means carrying forward what we’ve lived through, even when it complicates our fantasies.

Certain experiences were great the first time, Lord knows, but I don’t want to relive the 1970s or ’80s. I want to live now, alert to danger, capable of gratitude without illusion, willing to bear the weight of memory rather than dissolve into it.

Nostalgia has its place. But don’t be seduced by sedation.

Editor’s note: A version of this article appeared originally on Substack.

NO HANDS: New Japanese firm trains robots without human input

A Japanese tech firm says it is moving toward superintelligence with a big step forward in AI.

Integral AI, which is led by a former Google AI employee, announced in a press release that it had made significant progress with its artificial general intelligence model, which can now acquire new skills without human intervention.

The AI system learns its new skills “safely, efficiently, and reliably,” the company said, claiming that the AI had surpassed its defined markers and testing protocols.

As such, the AGI is allegedly capable of autonomous skill learning without using pre-existing datasets or human intervention. Integral also said the system is able to develop a “safe and reliable mastery” of skills, meaning that it does not produce any “catastrophic risks or unintended side effects.”

What those risks or side effects might be is unclear.

The last parameter Integral AI said its system met was energy efficiency: The system was tasked with limiting its energy expenditure to that of a human acquiring the same skill.

“These principles served as fundamental cornerstones and developmental benchmarks during the inception and testing of this first-in-its-class AGI learning system,” the press release said. Integral added that the system marked a “fundamental leap beyond the limits of current AI technologies.”

The Tokyo tech company also claimed its achievement was the next step toward “superintelligence” and marked a new era for humanity, with the AI’s learning process allegedly mirroring the complexity of human thought.

“Integral AI’s model architecture grows, abstracts, plans, and acts as a unified system,” the company wrote, adding that the system will serve as the groundwork for “unprecedented adaptability,” particularly in the field of robotics.

This means that with the help of this AGI, autonomous robots would be able to observe and learn in the real world and conceivably pick up new skills in real-world environments without the help of pesky humans.

Jad Tarifi, CEO and co-founder of Integral AI, called the announcement “more than just a technical achievement,” describing it as “the next chapter in the story of human civilization.”

“Our mission now is to scale this AGI-capable model, still in its infancy, toward embodied superintelligence that expands freedom and collective agency,” Tarifi added.

According to Interesting Engineering, the Lebanese founder said he worked at Google for a decade before starting his own company. He allegedly chose Japan over Silicon Valley because of Japan’s position as a world leader in robotics.

Like Blaze News? Bypass the censors, sign up for our newsletters, and get stories like this direct to your inbox. Sign up here!

McDonald’s team admits workload on hated AI Christmas ad ‘far exceeded’ live-action shoots

Another advertiser wants consumers to know how hard people worked on its artificial intelligence-driven ad.

Sweetshop Films is behind the recently pulled McDonald’s Christmas commercial that appeared on YouTube but lasted only about four days before being dropped like a hot Christmas coal.


The ad was generated entirely by AI for McDonald’s Netherlands, which took ownership of the fact that it was poorly received.

“The Christmas commercial was intended to show the stressful moments during the holidays in the Netherlands,” the company said in a statement, per the Guardian.

“However, we notice — based on the social comments and international media coverage — that for many guests this period is ‘the most wonderful time of the year,'” they added.

Sweetshop Films defended its use of AI for the ad. “It’s never about replacing craft; it’s about expanding the toolbox. The vision, the taste, the leadership … that will always be human,” said CEO Melanie Bridge, per NBC News.

Bridge took it one step further, though, and claimed her team worked longer than a typical ad team would.

“And here’s the part people don’t see,” the CEO continued. “The hours that went into this job far exceeded a traditional shoot. Ten people, five weeks, full-time.”

These statements were not met with holiday cheer.


X users went rabid at the idea that Sweetshop, alongside AI specialist company the Gardening Club, put more effort into producing the videos than a typical production team would for a commercial.

The Gardening Club reportedly made statements like, “We were working right on the edge of what this tech can do,” and, “The man-hours poured into this film were more than a traditional Production.”

“So all that ‘effort’ and they still managed to produce the ugliest slop just goes to show how useless gen AI is,” wrote an X user named Tristan.

An alleged art director named Haley said she was legitimately confused by the idea of the “sheer human craft” claimed to be behind the AI generation.

“What craft? What does that even look like outside of just clicking to generate over and over and over and over again until you get something you like?” she asked.

Another X user named Bruce added that “AI users are like high schoolers who got good grades because they tried hard, then are shocked to find at university they get judged on results, not effort. I have no doubt they try hard. But the results aren’t worth the effort.”

Photo by Tim Boyle/Getty Images


The Sweetshop CEO did indeed express that the road to the McDonald’s AI ad was a painstaking endeavor, claiming that “for seven weeks, we hardly slept” and “generated what felt like dailies — thousands of takes — then shaped them in the edit just as we would on any high-craft production.”

“This wasn’t an AI trick. It was a film,” Bridge said, according to Futurist.

Coca-Cola used the same “craftsmanship” framing for its own AI ad in November, when it said the company had pored through 70,000 video clips over 30 days.

The boasts resulted in backlash akin to what McDonald’s is receiving, which included reactions on X like, “McDonald’s unveiled what has to be the most god-awful ad I’ve seen this year — worse than Coca-Cola’s.”


Trump takes bold step to protect America’s AI ‘dominance’ — but blue states may not like it

The Trump administration is challenging bureaucracy and freeing up the tech industry from burdensome regulations as the AI race speeds on. This week saw Trump’s most recent efforts to keep the United States on the leading edge.

President Donald Trump signed an executive order Thursday that will challenge state AI regulations and work toward “a minimally burdensome national standard — not 50 discordant state ones.”


“It is the policy of the United States to sustain and enhance the United States’ global AI dominance through a minimally burdensome national policy framework for AI,” the executive order reads.

The executive order commands the creation of the AI Litigation Task Force, “whose sole responsibility shall be to challenge state AI laws inconsistent with the policy set forth in … this order.”


Photo by ANDREW CABALLERO-REYNOLDS / AFP via Getty Images


The order provided more reasons for a national standard as well.

For example, it cited a new Colorado law banning “algorithmic discrimination,” which, the order argued, may force AI models to produce false results in order to comply with that stipulation. It also argued that state laws are responsible for much of the ideological bias in AI models and that state laws “sometimes impermissibly regulate beyond state borders, impinging on interstate commerce.”

On Monday, Trump hinted that he would sign an executive order this week that would challenge cumbersome AI regulations at the state level.

Trump said in a Truth Social post on Monday, “There must be only One Rulebook if we are going to continue to lead in AI.”

“We are beating ALL COUNTRIES at this point in the race, but that won’t last long if we are going to have 50 States, many of them bad actors, involved in RULES and the APPROVAL PROCESS,” Trump continued. “THERE CAN BE NO DOUBT ABOUT THIS! AI WILL BE DESTROYED IN ITS INFANCY! I will be doing a ONE RULE Executive Order this week. You can’t expect a company to get 50 Approvals every time they want to do something.”

The order is framed as a provisional measure until Congress is able to establish a national standard to replace the “patchwork of 50 regulatory regimes” that is slowly rising out of the states.



When Bernie Sanders and I agree on AI, America had better pay attention

Democratic Socialist Bernie Sanders (I-Vt.) warned recently in the London Guardian that artificial intelligence “is getting far too little discussion in Congress, the media, and within the general population” despite the speed at which it is developing. “That has got to change.”

To my surprise, as a conservative advocate of limited government and free markets, I agree completely.


As I read Sanders’ piece, I kept thinking, “This sounds like something I could have written!” That alone should tell us something. If two people who disagree on almost everything else see the same dangers emerging from artificial intelligence, then maybe we can set aside the usual partisan divides and confront a problem that will touch every American.

Different policies, same fears

I’ve worked in the policy world for more than a decade, and it’s fair to say Bernie Sanders and I have opposed each other in nearly every major fight. I’ve pushed back against his single-payer health care plans. I’ve worked to stop his Green New Deal agenda. On economic policy, Sanders has long stood for the exact opposite of the free-market principles I believe make prosperity possible.

That’s why reading his AI op-ed felt almost jarring. Time after time, his concerns mirrored my own.

Sanders warned about the unprecedented power Silicon Valley elites now wield over this transformational technology. As someone who spent years battling Big Tech censorship, I share his alarm over unaccountable tech oligarchs shaping information, culture, and political discourse.

He points to forecasts showing that AI-driven automation could displace nearly 100 million American jobs in the coming decade. In 2023, I helped Glenn Beck write “Dark Future: Uncovering the Great Reset’s Terrifying Next Phase,” in which we raised the same red flag: that rapid automation could destabilize the workforce faster than society can adapt.

Sanders highlights how AI threatens privacy, civil liberties, and personal autonomy. These are concerns I write and speak about constantly. Sanders notes that AI isn’t just changing industry; it’s reshaping the human condition, foreign policy, and even the structure of democratic life. On all of this, he is correct.

When a Democratic Socialist and a free-market conservative diagnose the same disease, it usually means the symptoms are too obvious to ignore.

Where we might differ

While Sanders and I share almost identical fears about AI, I suspect we would quickly diverge on the solutions. In his op-ed, he offers no real policy prescriptions at all. Instead, he simply says, “Congress must act now.” Act how? Sanders never says. And to be fair, that ambiguity is a dilemma I recognize.

As someone who argues consistently for limited government, I’m reluctant to call for new regulations. History shows that sweeping, top-down interventions usually create more problems than they solve. Yet AI poses a challenge unlike anything we’ve seen before — one that neither the market nor Congress can responsibly ignore.


Photo by Cesc Maymo/Getty Images


When Sanders says, “Congress must act,” does he want sweeping, heavy-handed regulations that freeze innovation? Does he envision embedding ESG-style subjective metrics into AI systems, politicizing them further? Does he want to codify conformity to European Union AI regulations?

We cannot allow a handful of corporations or governments to embed their subjective values into systems that increasingly manipulate our decisions, influence our communications, and undermine our autonomy.

The nonnegotiables

Instead of vague calls for Congress to “do something,” we need a clear framework rooted in enduring American principles.

AI systems (especially those deployed across major sectors) must be built with hard, nonnegotiable safeguards that protect the individual from both corporate and governmental overreach.

This means embedding constitutional values into AI design, enshrining guarantees for free speech, due process, privacy, and equal treatment. It means ensuring transparency around how these systems operate and what data they collect.

This also means preventing ideological influence, whether from Beijing, Silicon Valley, or Washington, D.C., by insisting on objectivity, neutrality, and accountability.

These principles should not be considered partisan. They are the guardrails, rooted in the Constitution, which protect us from any institution, public or private, that seeks too much power.

And that is why the overlap between Sanders’ concerns and mine matters so much. AI is neither a left nor a right issue. It is a human issue that will decide who holds power in the decades ahead and whether individuals retain sovereignty.

If Bernie Sanders and I both see the same storm gathering on the horizon, perhaps it’s time the rest of the country looks up and recognizes the clouds for what they are.

Now is the moment for Americans, across parties and philosophies, to insist that AI strengthen liberty rather than erode it. If we fail to set those boundaries today, we may soon find that the most important choices about our future are no longer made by people at all.
